Bloomberg [1] estimates that the SaaS market will grow to over $600 billion by 2023, quadrupling its 2020 levels. This is an incentive for many software vendors to create SaaS offerings in the Cloud for products previously operated by customers in-house. In addition to technical modernization and building operational expertise, vendors on this journey need to address a very specific problem: how to deal with loyal customers who cannot shift operations of the product to the cloud? There can be various reasons for this:

The product is used in business areas subject to strict regulations, forcing the customer to operate in-house.

The customer has invested heavily in on-premises infrastructure and is waiting for payback before moving to SaaS.

The product must be co-located with other systems of the customer because of low-latency requirements in the integration.

The product handles large amounts of data that the customer has not yet migrated to the cloud.

The software vendor has several options to deal with this problem:

The existing product is further developed in parallel with the SaaS offering. Both variants will be advertised as one product. The added value of the SaaS solution from a customer’s perspective is merely the outsourcing of operations.

The existing product enters a sunset phase. This means active development is discontinued, and the product only receives security updates and support for a fixed length of time. The SaaS offering can be created from scratch.

The SaaS offering becomes the software vendor’s strategic focus and is expected to be responsible for the main revenue in the future. The existing product becomes the SaaS offering’s core. Technical architecture and deployment model are chosen to enable operations of the core product outside the Cloud.

In the first case, the software vendor needs to either increase its development efforts to support two deployment models or sell a managed hosting offer as SaaS. Advantages of modern microservices architectures, such as shorter release cycles and more efficient operations, are often lost in this case. Functional parity between licensed product and SaaS offering is hard to maintain long term. For example, cloud providers offer services in the area of artificial intelligence and machine learning (AI/ML) that can only be implemented with considerable effort by software vendors themselves. Such services often cannot be operated cost-effectively in single-tenant environments. Software vendors are free to integrate Cloud-based services in the SaaS variant to implement innovative use cases.


If software vendors opt for the second option, they have the most flexibility regarding architecture and functional design of the software. However, they risk losing loyal customers and may suffer major revenue losses as a result. It is important to clarify with customers in advance whether a switch to a SaaS offering is realistic and to plan the transition period generously.

The third option therefore offers a tradeoff: self-hosting customers are given the option of continued operations on their own responsibility and under full control. At the same time, software vendors need not consider two technical architectures in development. For new use cases, they can decide on a case-by-case basis whether the functionality should (or can) be implemented in the core product. If the use case is developed only for the SaaS variant, software vendors have absolute liberty with regard to architecture and design.

Software vendors need to find suitable models for deployment and operations if they want to offer the core product outside the Cloud in addition to the SaaS offering. The chosen model needs to enable the vendor to operate the product effectively for a growing number of customers, and it needs to support deployments on heterogeneous infrastructure in individual clients’ data centers, run by their own IT. Modernizations often also aim to improve development by enabling shorter release cycles with smaller change sets, product planning driven by insights from end-user feedback, and automated provisioning of test and demo environments.

Containerized solutions are often chosen because they move many operational tasks related to provisioning and deployment to the build phase of development. They support microservices architectures and are therefore well suited for agile development. In addition, operations teams today often already have experience running container platforms. In particular, the open source system Kubernetes is widely adopted and can be deployed to a huge variety of hardware.

So does Kubernetes already solve the portability problem? Do software vendors only need to make their architecture compatible with Kubernetes in order to enable SaaS? Unfortunately, the answer to this question is “no.” The reason is the high degree of flexibility and rapid evolution of the Kubernetes project. APIs used today to describe a workload may already be gone with the next release. Moreover, administrators of a Kubernetes platform can add extensions or even replace platform standards. It is also not guaranteed that customers’ own operations teams are comfortable with and/or experienced enough to deploy and operate clusters for mission-critical applications despite the wide adoption of Kubernetes.

This two-part article discusses two platforms for running a public SaaS offering in the Cloud that also enable software vendors to support deployments of their products to customer-provided infrastructure:

Amazon Elastic Container Service (ECS) is a container platform developed by Amazon Web Services (AWS). It was designed with the goal of simplifying processes in the development, deployment and operations of containerized applications. As a result, ECS is particularly useful when software vendors want to minimize their operational overhead or have little experience with cloud-native applications or container platforms.

With Amazon Elastic Kubernetes Service (EKS), AWS offers a managed service for running Kubernetes applications. EKS is certified by the Cloud Native Computing Foundation (CNCF) and guarantees compatibility with the open source version of Kubernetes. EKS users have the flexibility that they are used to from Kubernetes to customize the cluster to their own needs.

With Amazon ECS Anywhere and Amazon EKS Anywhere, software vendors can run containerized solutions for ECS and EKS outside of the AWS Cloud and run some or all of their SaaS offering on customer hardware. We show how this can help software vendors deliver solutions for customers with requirements to operate the product on-premises. In both articles of this series, we limit discussion to the features of the Anywhere components. For details on ECS and EKS themselves, we refer the interested reader to the official documentation [2], [3].

In the remainder of this article, we will look at the architecture of ECS Anywhere in detail. We will also show approaches to deployment and operations of SaaS on customer-provided infrastructure. The follow-up article will cover EKS Anywhere.

Extend the cloud with your own hardware

Amazon ECS was initially released as a container orchestration platform for AWS customers in 2014. The interface used to describe workloads is based on Docker Compose. In a collaboration between Docker and AWS, native ECS support is even integrated into the Docker Compose CLI [4].

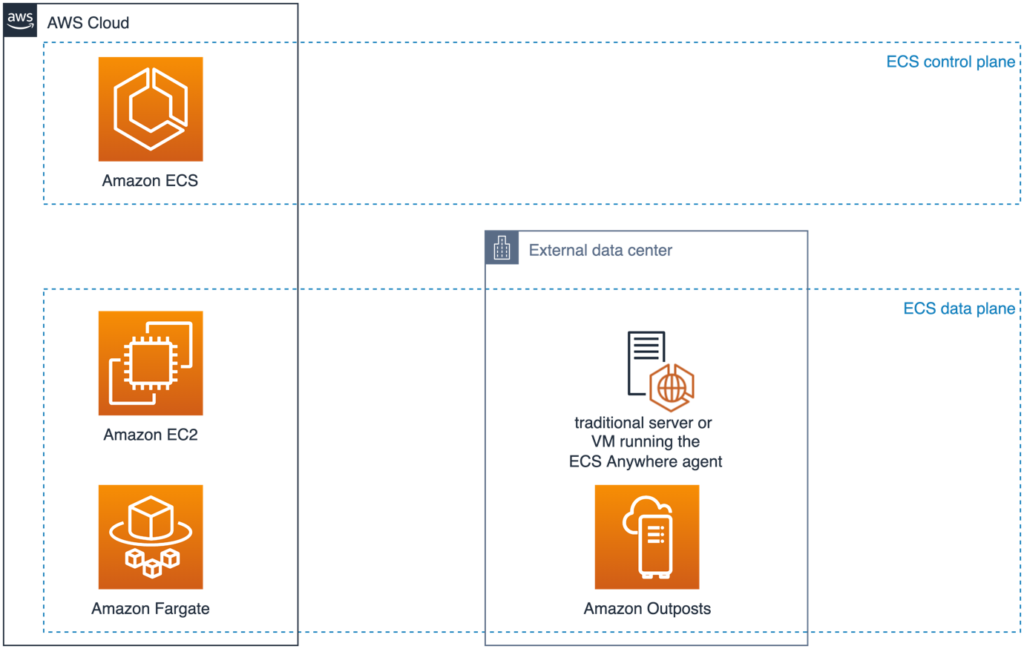

Like other container platforms, an ECS cluster is divided into a control plane and a data plane. The control plane performs management functions to orchestrate, configure, and control containerized applications. The data plane provides resources used to run workloads themselves.

The ECS control plane was designed to run in AWS from the start. It provides ECS users a very high level of integration with other AWS services. This includes, for example, AWS Identity and Access Management (IAM) for managing access rights to the ECS API, or Elastic Load Balancing (ELB) as an ingress to applications running in ECS. This tight integration means that ECS cannot be run without a connection to the Cloud. For ECS Anywhere, this results in the architecture of control and data plane seen in Figure 1.

Fig. 1: High-level architecture of Amazon ECS

The ECS Anywhere control plane is powered by the Cloud-based ECS control plane in the AWS region. For the data plane, four runtime environments are available, two in the Cloud and two in external data centers:

- Amazon EC2: virtual machines in the Cloud, administered by the ECS user;

- AWS Fargate: the serverless option to run containers and let AWS manage underlying infrastructure in the Cloud;

- AWS Outposts: AWS-provided infrastructure with a subset of the AWS Cloud running on-premises, and

- Traditional servers and VMs: integrated via ECS Anywhere.

An ECS cluster can include any combination of runtime environments. Scheduler rules are used in order to selectively place workloads in a runtime environment.

The inner workings of an external compute node

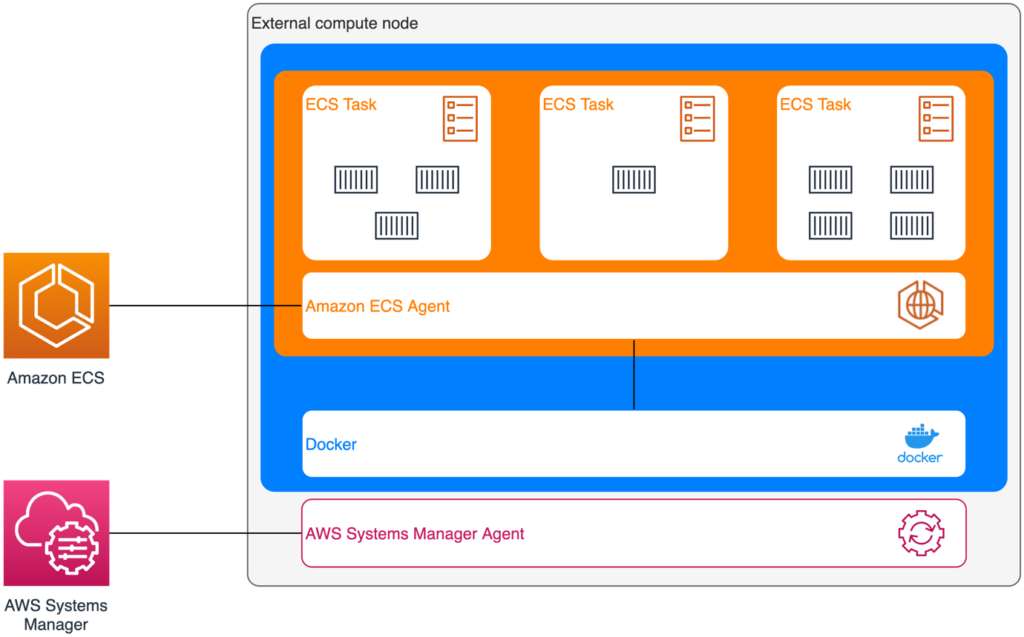

Almost any external compute infrastructure can be added to an ECS cluster with ECS Anywhere. With this, we standardize the API for deploying applications in and outside the Cloud. Since the control plane operates in the Cloud and is provided as a managed service, we focus on the architecture of external compute nodes (Fig. 2).

Fig. 2: An ECS Anywhere Node in detail

The requirements for an external compute node are a supported operating system (such as Ubuntu, CentOS, or SUSE; see [5] for a complete list) and an outgoing Internet connection. Two agents are installed on the desired server. First, the AWS Systems Manager agent is installed. During installation, the server registers with the AWS Systems Manager (SSM) and can then be configured and managed with it. Next, Docker and the Amazon ECS Container Agent are installed. The latter runs as a Docker container itself and handles communication between the Amazon ECS control plane and the local Docker daemon. This communication is TLS-encrypted via HTTPS and is initiated by the ECS Container Agent. Thus, the AWS API must be continuously reachable by the agent to maintain contact with the ECS control plane. Containers running in the data plane are not affected by disconnections between the agent and the control plane and continue their work in case of disruptions. However, if control plane events such as scaling of tasks occur in the meantime, they cannot be executed until the connection is re-established. The official documentation [5] specifies the AWS API endpoints for firewall configuration.
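The behavior during a disconnection can be illustrated with a small toy model. This is not the real ECS Container Agent: the class `ToyAgent`, its methods, and the container names are hypothetical and only mirror the two statements above, namely that running containers keep working during an outage and that control plane actions are applied once the connection is back.

```python
from collections import deque


class ToyAgent:
    """Toy stand-in for an agent that talks to a remote control plane."""

    def __init__(self):
        self.connected = True
        self.running = {"web-1"}   # containers already running on the node
        self.pending = deque()     # control plane actions not yet delivered

    def control_plane_wants(self, action, container):
        # The control plane records its intent; the node sees it only when connected.
        self.pending.append((action, container))
        self.sync()

    def sync(self):
        # Actions are applied only while the outbound connection is up.
        while self.connected and self.pending:
            action, container = self.pending.popleft()
            if action == "start":
                self.running.add(container)
            elif action == "stop":
                self.running.discard(container)

    def reconnect(self):
        self.connected = True
        self.sync()


agent = ToyAgent()
agent.connected = False                       # simulate a network outage
agent.control_plane_wants("start", "web-2")   # scaling event during the outage
print(sorted(agent.running))                  # the existing container keeps running
agent.reconnect()
print(sorted(agent.running))                  # the scaling action is applied afterwards
```

The model deliberately ignores retries, timeouts, and health reporting; it only shows why an outage delays scaling but does not interrupt workloads.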

Only information necessary for container management is exchanged between the external compute node and the ECS control plane. This includes, for example, the health and lifecycle information of nodes and tasks. The ECS Container Agent does not send any user data, such as the contents of the mounted volumes, to the control plane.

Access rights need to be granted to the external compute node so that the agent can authenticate against the AWS API and communicate with the service in the Cloud. This is done using IAM roles. They can be managed with the SSM Agent in the same way as EC2 roles in the Cloud. The SSM Agent uses the server’s fingerprint to identify a server and provide credentials for the assigned role.
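As a rough sketch of what such a role looks like, the following snippet builds the trust policy that allows Systems Manager to assume the role on behalf of the node. The two managed policy ARNs are the ones that, to our understanding, the ECS Anywhere documentation [5] names for external instances; treat them as an assumption and verify them against the documentation before use. The function name `build_trust_policy` is made up for this example.

```python
import json

# Managed policies assumed to be required for an ECS Anywhere node role (see [5]).
MANAGED_POLICY_ARNS = [
    "arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore",
    "arn:aws:iam::aws:policy/service-role/AmazonEC2ContainerServiceforEC2Role",
]


def build_trust_policy():
    """Return a trust policy that lets Systems Manager assume the node role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "ssm.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


print(json.dumps(build_trust_policy(), indent=2))
```

The REGISTER EXTERNAL INSTANCES dialog described in the next section creates such a role automatically, so writing the policy by hand is only needed for scripted setups.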

Be aware that external compute nodes are not AWS managed infrastructure. The responsibility of updating the operating system and agent remains with the customer and needs to be included in regular patch processes. The SSM agent can be updated with the AWS API conveniently [6]. As for the ECS container agent, AWS maintains ecs-init packages [7].

ECS Anywhere in Action

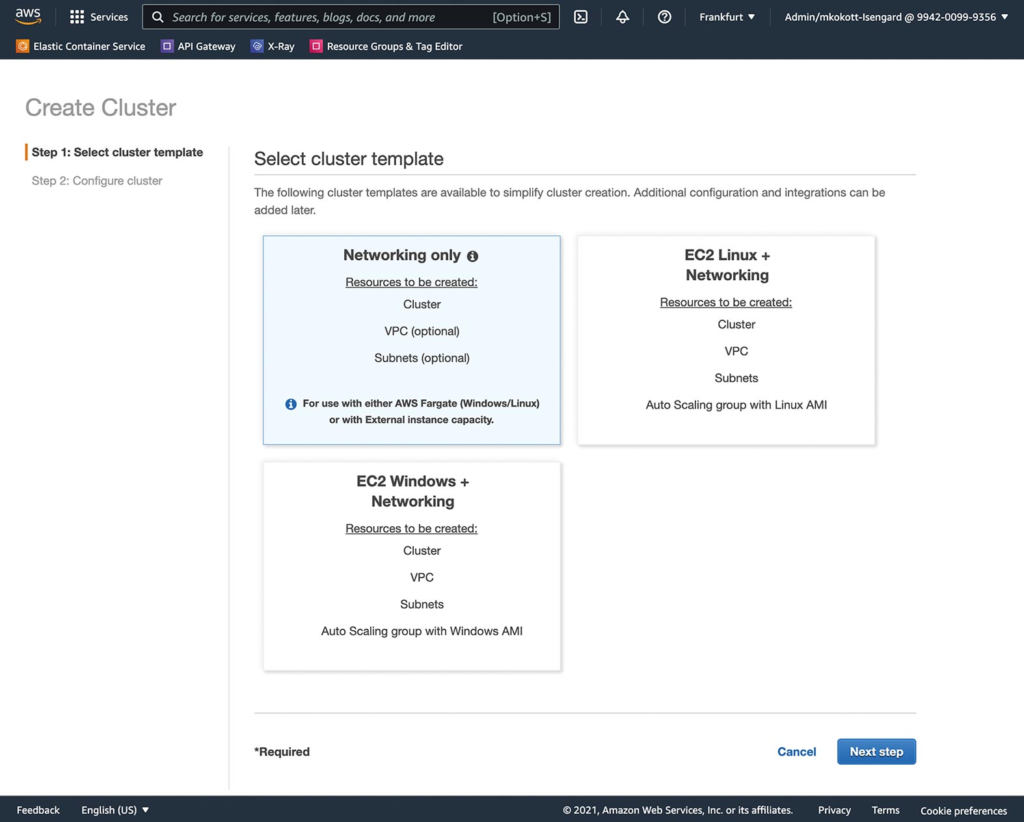

We will walk through the steps to add an Ubuntu 20.04 server as an external compute node to an ECS cluster. To do this, we first need an ECS cluster, which we create via the AWS Management Console as follows. For our purposes, a cluster without compute capacity will do. We select the option to create a cluster with networking only (Fig. 3).

We choose a name in the second dialog and with that the cluster creation is already done. We now find the REGISTER EXTERNAL INSTANCES button for the newly created cluster under the ECS INSTANCES tab. It creates an IAM role for the external compute node and provides a shell command to register the node. It needs to be executed on the external compute node. The command loads the installation script that gets executed with individual parameters like the activation code and the cluster name (Listing 1).

Listing 1

    curl --proto "https" -o "/tmp/ecs-anywhere-install.sh" \
      "https://amazon-ecs-agent.s3.amazonaws.com/ecs-anywhere-install-latest.sh" && \
      bash /tmp/ecs-anywhere-install.sh \
        --region "eu-central-1" \
        --cluster "ecs-anywhere-example" \
        --activation-id "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxx" \
        --activation-code "XXXXxxxxXXXXxxxxXXXX"

The script installs the SSM agent as a Linux service (Listing 2).

Listing 2

    systemctl status amazon-ssm-agent
    amazon-ssm-agent.service - amazon-ssm-agent
       Loaded: loaded (/lib/systemd/system/amazon-ssm-agent.service; enabled; vendor preset: enabled)
       Active: active (running) since Tue 2021-10-26 10:47:39 UTC; 12min ago
     Main PID: 33931 (amazon-ssm-agen)
        Tasks: 21 (limit: 4435)
       CGroup: /system.slice/amazon-ssm-agent.service
               ├─33931 /usr/bin/amazon-ssm-agent
               └─33949 /usr/bin/ssm-agent-worker

It also installs Docker and starts the ECS Container Agent locally as a container (Listing 3).

Listing 3

    docker ps
    CONTAINER ID   IMAGE                            COMMAND    CREATED          STATUS                    PORTS   NAMES
    607c7fe20ef2   amazon/amazon-ecs-agent:latest   "/agent"   11 minutes ago   Up 11 minutes (healthy)           ecs-agent

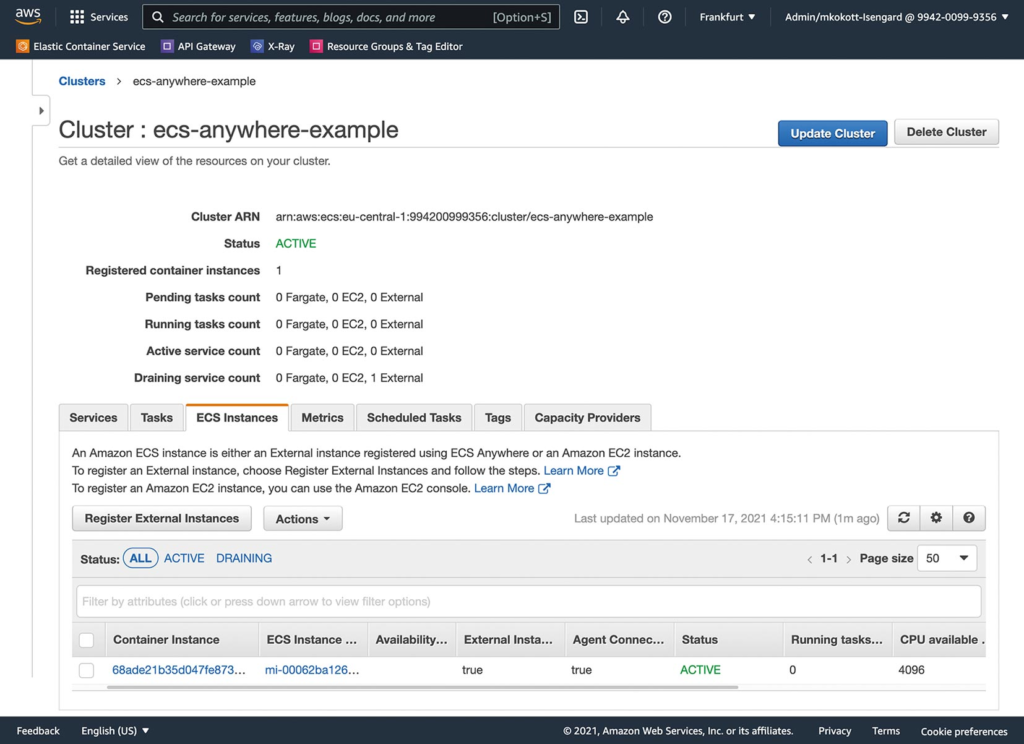

The new external compute node is now listed in the detailed view of the ECS cluster under the ECS INSTANCES tab (Fig. 4).

Fig. 4: ECS cluster with external compute node

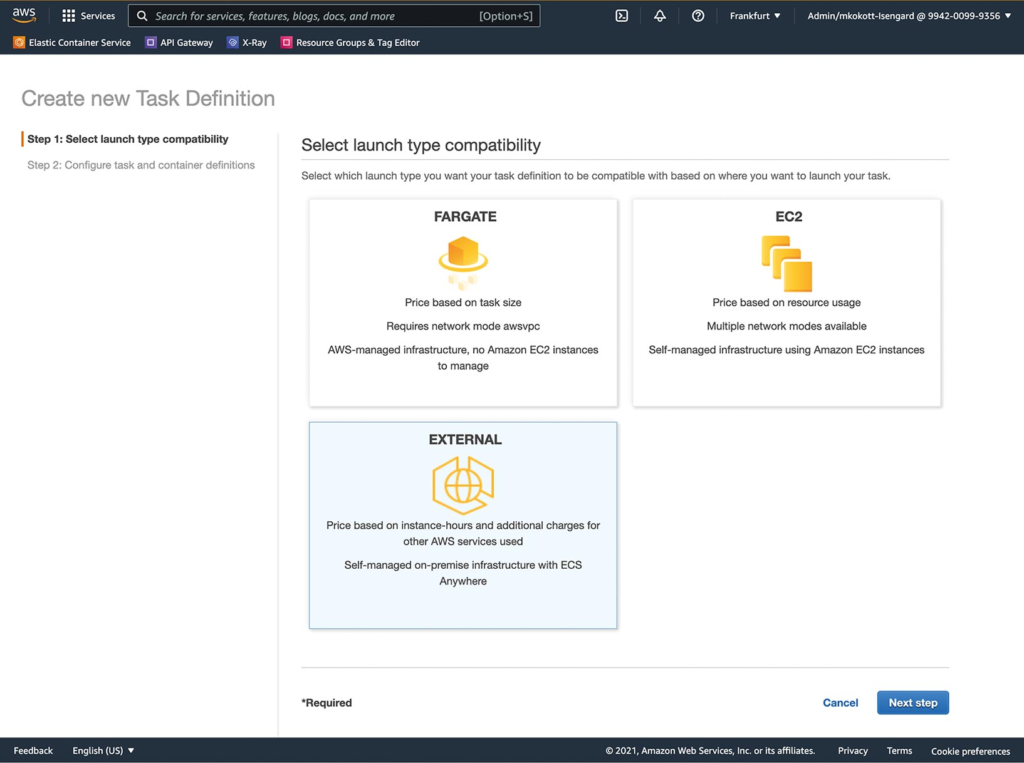

Now, ECS tasks can be executed on the external compute node in the ECS data plane. For this, the requiresCompatibilities parameter of the task needs to be set to EXTERNAL. This option is set as Launch Type in the dialog for registering a new task definition with the AWS Management Console (Fig. 5).

Fig. 5: Using the GUI to define ECS tasks for external compute nodes

Listing 4 shows the relevant parts of a task definition for a minimal WordPress installation to be executed on the external compute node.

Listing 4

    requiresCompatibilities:
      - EXTERNAL
    containerDefinitions:
      - name: wordpress
        image: wordpress:latest
        memory: 256
        cpu: 256
        essential: true
        portMappings:
          - containerPort: 80
            hostPort: 8080
            protocol: tcp
    networkMode: bridge
    family: wp-anywhere
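For scripted setups, the same task definition can be expressed as a plain dictionary. The sketch below mirrors Listing 4 and adds a small pre-flight check; the function names `wordpress_task_definition` and `check_external` are hypothetical, and the check only covers the two conditions named in this article (the EXTERNAL compatibility and the network modes bridge, host, and none).

```python
import json

ALLOWED_NETWORK_MODES = {"bridge", "host", "none"}


def wordpress_task_definition():
    """Mirror Listing 4 as a dictionary of task definition parameters."""
    return {
        "family": "wp-anywhere",
        "requiresCompatibilities": ["EXTERNAL"],
        "networkMode": "bridge",
        "containerDefinitions": [
            {
                "name": "wordpress",
                "image": "wordpress:latest",
                "memory": 256,
                "cpu": 256,
                "essential": True,
                "portMappings": [
                    {"containerPort": 80, "hostPort": 8080, "protocol": "tcp"}
                ],
            }
        ],
    }


def check_external(task_def):
    """Return a list of problems that would keep the task off external nodes."""
    problems = []
    if "EXTERNAL" not in task_def.get("requiresCompatibilities", []):
        problems.append("requiresCompatibilities must include EXTERNAL")
    # This sketch treats a missing networkMode as bridge.
    if task_def.get("networkMode", "bridge") not in ALLOWED_NETWORK_MODES:
        problems.append("networkMode must be bridge, host, or none")
    return problems


task_def = wordpress_task_definition()
print(check_external(task_def))
print(json.dumps(task_def, indent=2))
```

Assuming boto3 is installed and credentials are configured, a dictionary of this shape could be passed to `boto3.client("ecs").register_task_definition(**task_def)`, and the task started with `run_task(..., launchType="EXTERNAL")`.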

Networking from ECS Anywhere Tasks

Amazon ECS was designed with the goal of minimizing operational overhead. Customers focus on their application and outsource implementation details of technical components of the architecture to managed services. One of these components is load balancing of tasks. ECS uses the Elastic Load Balancing Service (ELB) to route incoming network traffic for a service to its associated containers. The ELB service is not available outside of AWS. And unlike Kubernetes, for instance, the component in the ECS control plane responsible for managing ELBs cannot be replaced with one for a different load balancer.

ECS is therefore particularly suitable if only specific backend components, rather than the entire application, need to run outside the Cloud. Some customers running Big Data applications, for example, have invested in their own GPU-based hardware. These resources can be used with ECS Anywhere for the corresponding application components of a Cloud-based SaaS product [8].

Tasks running on external compute nodes need connectivity to the local network in most cases. ECS Anywhere supports three network modes: bridge, host, and none. The bridge and host modes make container ports externally available via the host’s network connection, while none disables external network connectivity for a container. The official ECS documentation [9] describes the different network modes in more detail. Customers can build individual solutions based on this to route traffic within their network to tasks running on external compute nodes. Such a solution can be as simple as configuring static IPs and ports of tasks in an existing load balancer.
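To make the static variant concrete, the following sketch renders an nginx `upstream` block from a list of node addresses. nginx is only one possible load balancer and is our choice for the illustration; the IP addresses are hypothetical, and port 8080 is the `hostPort` from Listing 4.

```python
def render_upstream(name, backends):
    """Render an nginx upstream block for a list of (host_ip, host_port) pairs."""
    if not backends:
        raise ValueError("at least one backend is required")
    lines = [f"upstream {name} {{"]
    for ip, port in backends:
        lines.append(f"    server {ip}:{port};")
    lines.append("}")
    return "\n".join(lines)


# Hypothetical static addresses of two external compute nodes.
print(render_upstream("wp_anywhere", [("10.0.0.11", 8080), ("10.0.0.12", 8080)]))
```

The drawback of this approach is visible in the code: the backend list is static, so it has to be regenerated whenever tasks move to other nodes or ports.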

Some use cases require connectivity between tasks running on external compute nodes and resources within an Amazon Virtual Private Cloud (VPC) in the Cloud. A VPC is a virtual network in an AWS account. It provides isolation of resources on the network level. If an external compute node is integrated into a VPC via a site-to-site VPN connection, tasks placed on it can not only reach resources such as databases running there but can also be placed behind an ELB in the Cloud. This can be useful in hybrid scenarios when parts of an application in the Cloud need to initiate connections to tasks in ECS Anywhere. This blog post [10] explains this scenario in more detail.

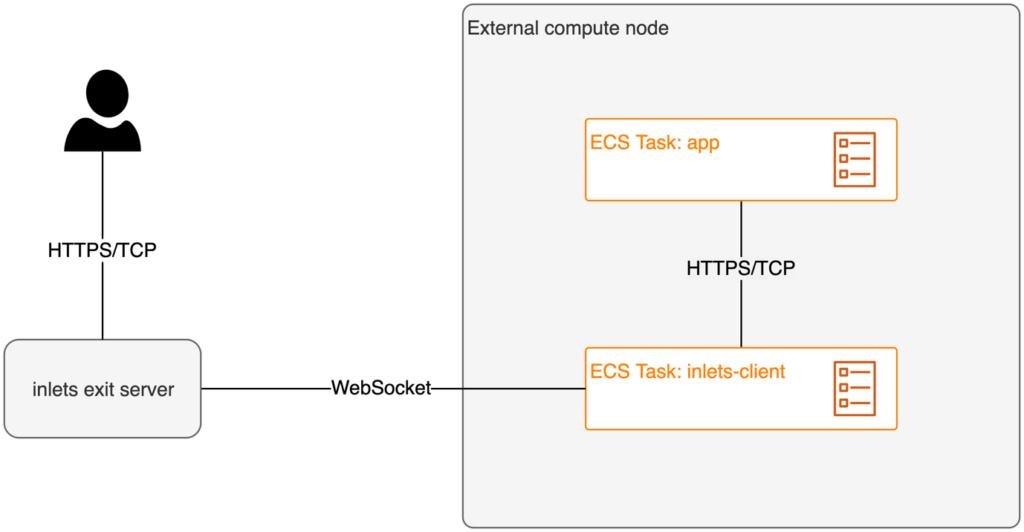

The use of inlets [11] is an option to make web apps running in ECS Anywhere externally available without static configuration of a load balancer. With the tool developed by OpenFaaS Ltd., connections to private resources can be established in a similar way as the communication between ECS Container Agent and ECS control plane is handled (Fig. 6).

Fig. 6: Ingress for ECS tasks via inlets

The tool consists of an inlets exit server and one or more inlets clients. The clients initiate an encrypted WebSocket connection to the exit server. The exit server then receives the requests from the application’s users and forwards them over the WebSocket connection to a client on a node running the actual service. The client takes over the function of a reverse proxy and forwards the request to the corresponding port of the locally running container. The inlets exit server can run anywhere, as long as inlets clients can establish a network connection to it. You can find a setup in the context of ECS Anywhere on Nathan Peck’s blog [12].

Release and deployment of new versions

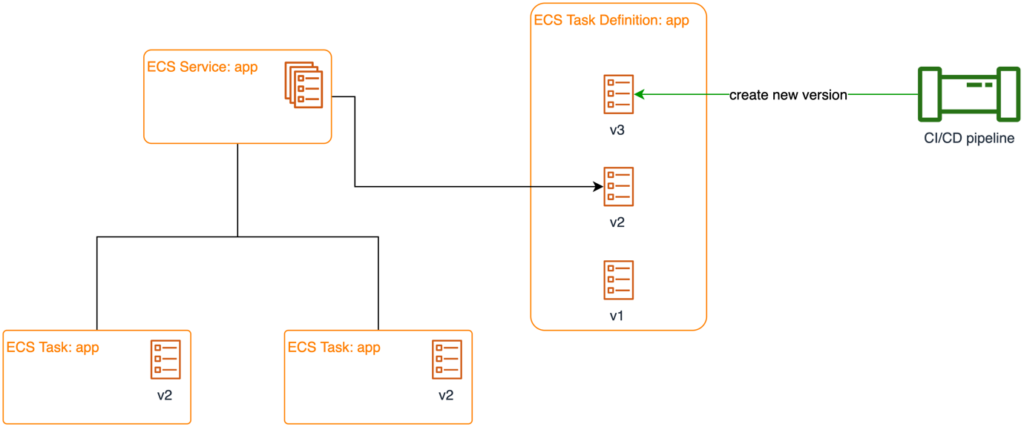

Controlled deployments of new versions and the ability to roll back in case of failure are vital for smooth operations of SaaS applications. ECS offers two components that support software vendors in their release and deployment process: task definitions and services. Task definitions contain container images and configuration of applications and are maintained in revisions. This means that a new task definition revision is created for every change (such as updating a container image). Old revisions of the same task definition remain available for rollbacks. A new revision will not affect the running instances in a cluster unless it gets deployed. This means that software vendors can push a release into a customer’s runtime environment regardless of maintenance windows or other constraints (Fig. 7).

Fig. 7: Release process with ECS

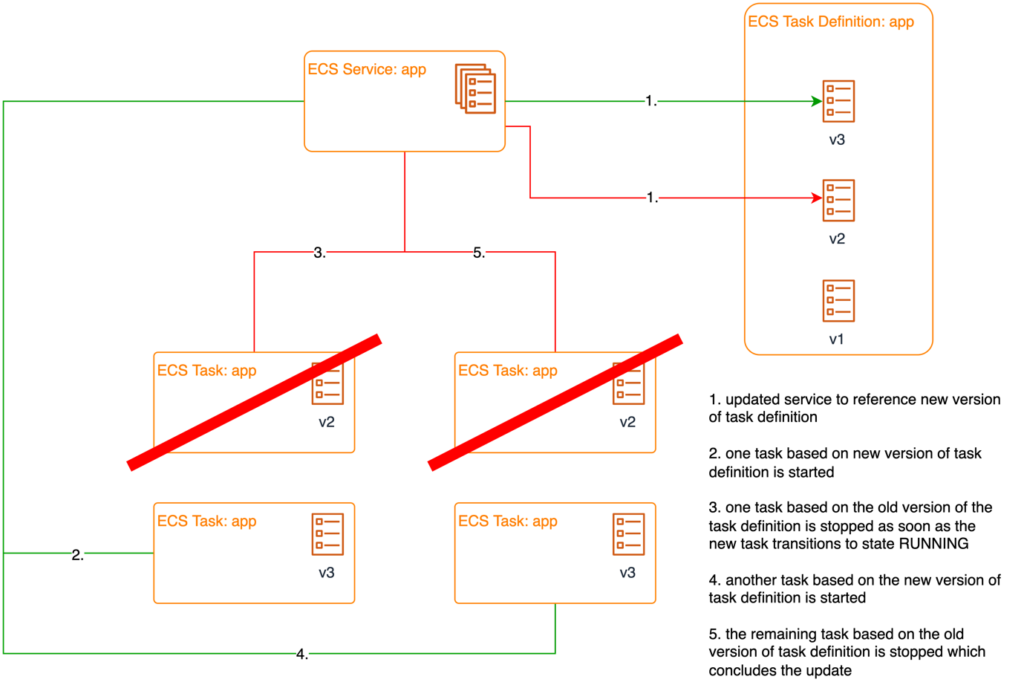

The construct of a service is used in ECS to roll out new versions of tasks. It is responsible for monitoring the state of tasks assigned to it. It replaces tasks if errors occur, for example. It also starts new instances if auto-scaling is activated and the load exceeds a certain threshold. The deployment configuration in the service specifies how new deployments will be performed. By default, it uses a rolling update. This means that new tasks are started in parallel to the old ones. The service waits for new tasks to enter the RUNNING state before old tasks are stopped.

Fig. 8: Deploying a new version with ECS
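The rolling update can be approximated with a short simulation. It is a simplified model under stated assumptions, not the ECS scheduler: new tasks are treated as RUNNING as soon as they are started, the parameters `min_healthy_pct` and `max_pct` stand in for the minimum healthy percent and maximum percent settings of the deployment configuration, and the defaults of 100 and 200 as well as the rounding are our assumptions.

```python
import math


def rolling_update(desired, min_healthy_pct=100, max_pct=200):
    """Simulate a rolling update; return (old, new) task counts after each step."""
    if not 0 <= min_healthy_pct <= 100 or max_pct < 100:
        raise ValueError("expected 0 <= min_healthy_pct <= 100 <= max_pct")
    lower = math.ceil(desired * min_healthy_pct / 100)   # fewest tasks allowed to run
    upper = math.floor(desired * max_pct / 100)          # most tasks allowed to run
    old, new = desired, 0
    steps = [(old, new)]
    while old > 0 or new < desired:
        # Start new tasks in parallel, as far as the upper limit allows.
        start = min(desired - new, upper - (old + new))
        if start > 0:
            new += start
            steps.append((old, new))
        # Stop old tasks only while the lower limit stays satisfied.
        stop = min(old, (old + new) - lower)
        if stop > 0:
            old -= stop
            steps.append((old, new))
        if start <= 0 and stop <= 0:
            raise ValueError("limits leave no room to replace tasks")
    return steps


print(rolling_update(4))            # all new tasks start first, then old ones stop
print(rolling_update(4, 50, 100))   # no extra capacity: replace in two batches
```

The second call models a node without spare capacity, which is a common situation on customer hardware: old tasks have to stop before new ones can start, so the service temporarily runs with fewer tasks.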

Rollbacks are also very easy because task definitions are versioned and deployment control is handled by the service construct. For a rollback, the service is simply reconfigured to point back to a previous revision. Failed updates are a common reason for this. ECS provides a deployment circuit breaker option to automatically perform rollbacks for any failed service update [13].
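Both rollback paths can be sketched as request parameters for the UpdateService API. The helper names `rollback_request` and `circuit_breaker_config` and the service name `wp-service` are hypothetical, and the sketch assumes that the previous revision is simply the current revision number minus one and is still registered.

```python
def rollback_request(cluster, service, family, current_revision):
    """Build UpdateService parameters that point a service at the previous revision."""
    if current_revision <= 1:
        raise ValueError("revision 1 has no predecessor to roll back to")
    return {
        "cluster": cluster,
        "service": service,
        "taskDefinition": f"{family}:{current_revision - 1}",
    }


def circuit_breaker_config():
    """Deployment configuration that rolls back failed deployments automatically."""
    return {"deploymentCircuitBreaker": {"enable": True, "rollback": True}}


request = rollback_request("ecs-anywhere-example", "wp-service", "wp-anywhere", 4)
print(request)
print(circuit_breaker_config())
```

With boto3 available, the first dictionary could be passed to `update_service(**request)`, and the second as its `deploymentConfiguration` argument. The manual variant gives the vendor control over the timing, while the circuit breaker reacts without human intervention.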

Monitoring in ECS

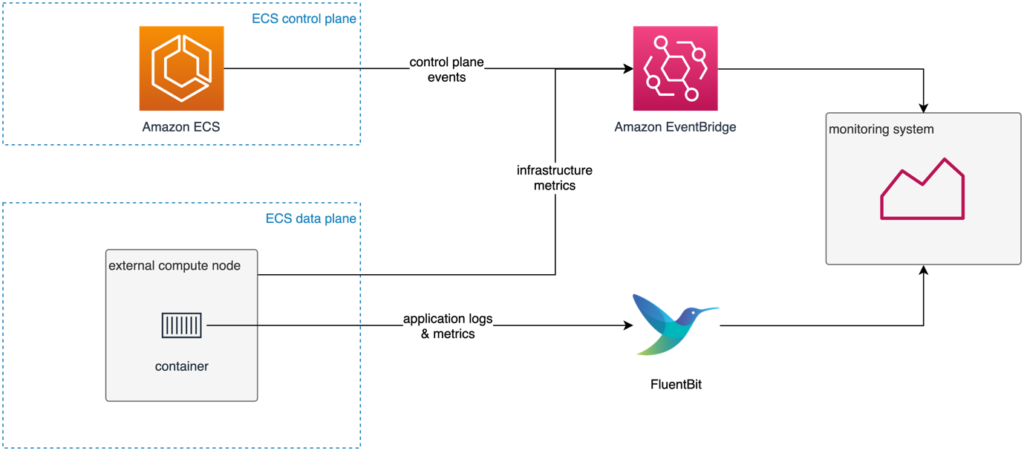

Finally, let us discuss one last important component in any IT operational concept: monitoring of the system. Logs, metrics, and events from different sources must be collected centrally to evaluate the system’s overall health.

ECS takes care of lifecycle tasks such as restarting failed tasks. As a result, relevant ECS control plane events [14] should be filtered with Amazon EventBridge and forwarded to the monitoring system. Control plane events can show missing CPU and RAM resources in the data plane or connection failures between control and data plane.
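Filtering works with event patterns. The sketch below builds a pattern for stopped tasks in one cluster and tests it against a sample event with a deliberately simplified matcher that supports only exact values and nested objects; EventBridge itself supports more operators. The account ID and cluster ARN are placeholders, and the function names are made up for this example.

```python
def stopped_task_pattern(cluster_arn):
    """Event pattern for tasks of one cluster that reached the STOPPED state."""
    return {
        "source": ["aws.ecs"],
        "detail-type": ["ECS Task State Change"],
        "detail": {"clusterArn": [cluster_arn], "lastStatus": ["STOPPED"]},
    }


def matches(pattern, event):
    """Simplified matcher: lists of exact values and nested objects only."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True


CLUSTER = "arn:aws:ecs:eu-central-1:111122223333:cluster/ecs-anywhere-example"
event = {
    "source": "aws.ecs",
    "detail-type": "ECS Task State Change",
    "detail": {"clusterArn": CLUSTER, "lastStatus": "STOPPED"},
}
print(matches(stopped_task_pattern(CLUSTER), event))
```

Other detail types listed in [14], such as container instance state changes, can be filtered in the same way to detect nodes that lost their connection.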

The compute node’s performance metrics can also be captured, in this case with Amazon CloudWatch. For this, the CloudWatch Agent is installed on the external compute node [15]. This agent sends metrics such as CPU load, memory consumption, or network usage to CloudWatch.

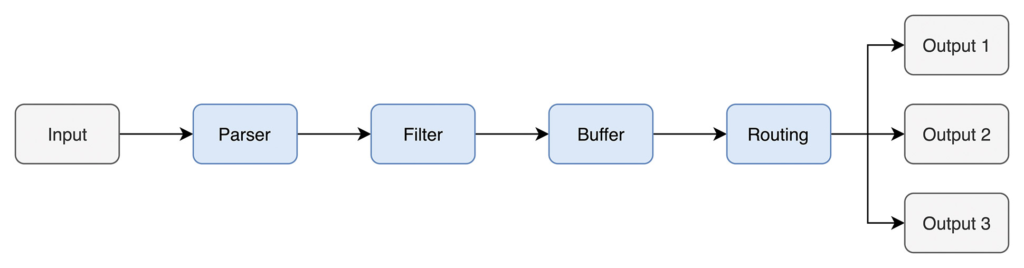

Metrics and logs from the application are another important building block. Fluent Bit [16] is a common solution for routing container logs and metrics to central logging systems. Fluent Bit defines a data pipeline to individually process log events (Fig. 9).

Fig. 9: The data pipeline for logstreams in Fluent Bit

The data pipeline uses input plug-ins to generate event streams from different sources, such as log files or the system journal. Parsers are used to make further processing in the pipeline efficient. These convert unstructured strings into structured objects with key-value pairs. Then, filters are used to manage events in the data stream. The events can be enriched by a filter with context like the runtime environment’s metadata. After preprocessing is complete, the events are stored in a buffer to prevent any data loss. During routing, a rule-based decision is made about which data sinks must receive an event. Lastly, the events are written to the data sinks via output plug-ins. Currently, Fluent Bit offers over 70 output plug-ins, which allows events to be sent to Prometheus, DataDog, or Kafka, for example.
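The stages can be mimicked in a few lines of Python to show how they fit together. This is a conceptual toy, not Fluent Bit's implementation or configuration syntax: the log format, the sink names `alerts` and `archive`, and the routing rule are invented for the example.

```python
import re
from collections import deque

# Parser: turn "LEVEL message" strings into structured key-value events.
LINE = re.compile(r"^(?P<level>[A-Z]+) (?P<message>.*)$")


def parse(raw):
    match = LINE.match(raw)
    return match.groupdict() if match else {"level": "UNKNOWN", "message": raw}


def enrich(event, metadata):
    # Filter: add context such as runtime environment metadata.
    return {**event, **metadata}


def route(event):
    # Router: errors go to both sinks, everything else only to the archive.
    return ["alerts", "archive"] if event["level"] == "ERROR" else ["archive"]


def run_pipeline(lines, metadata):
    # Buffer: hold preprocessed events until they are written to the sinks.
    buffer = deque(enrich(parse(line), metadata) for line in lines)
    sinks = {"alerts": [], "archive": []}
    while buffer:
        event = buffer.popleft()
        for sink in route(event):
            sinks[sink].append(event)   # Output: write the event to a sink
    return sinks


result = run_pipeline(["ERROR db down", "INFO started"],
                      {"cluster": "ecs-anywhere-example"})
print(len(result["alerts"]), len(result["archive"]))
```

In Fluent Bit, each of these stages is a plug-in configured declaratively, and the buffer can be backed by the file system instead of memory.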

For AWS container services—and thus, for ECS Anywhere—AWS FireLens and the AWS for Fluent Bit container image are convenient ways of integrating Fluent Bit into a workload. Fluent Bit can be configured directly in the task definition of ECS tasks [17], which then automatically enriches events with ECS-specific metadata.
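A task definition with FireLens might be assembled as in the following sketch. The helper `with_firelens` is hypothetical, the image tag and the CloudWatch output options follow the pattern of the examples in [17] as far as we recall them, and the log group name is a placeholder; check the exact option names against the documentation before relying on them.

```python
def with_firelens(task_def, region, log_group):
    """Return a copy of task_def with a Fluent Bit log router container added."""
    router = {
        "name": "log_router",
        "image": "amazon/aws-for-fluent-bit:latest",   # assumed image name
        "essential": True,
        "memoryReservation": 50,
        "firelensConfiguration": {"type": "fluentbit"},
    }
    log_config = {
        "logDriver": "awsfirelens",
        "options": {
            "Name": "cloudwatch",
            "region": region,
            "log_group_name": log_group,
            "log_stream_prefix": "from-fluent-bit-",
            "auto_create_group": "true",
        },
    }
    # Every application container sends its logs through the router.
    app_containers = [
        {**container, "logConfiguration": log_config}
        for container in task_def["containerDefinitions"]
    ]
    return {**task_def, "containerDefinitions": [router] + app_containers}


base = {
    "family": "wp-anywhere",
    "containerDefinitions": [{"name": "wordpress", "image": "wordpress:latest"}],
}
routed = with_firelens(base, "eu-central-1", "wp-anywhere-logs")
print([c["name"] for c in routed["containerDefinitions"]])
```

The function returns a new dictionary and leaves the input untouched, so the same base definition can be reused for environments with and without log routing.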

The chart in Figure 10 shows the flow of log events and metrics into the centralized monitoring system.

Fig. 10: Data flows for monitoring ECS Anywhere workloads


Conclusion

That concludes this article. We have discussed situations where software vendors face the challenge of deploying their SaaS solution partially or fully on customer-operated infrastructure. With ECS Anywhere, we took a look at a container platform that can help standardize deployment and operations between cloud and on-premises. In the next article, we will see how the Kubernetes service EKS Anywhere differs from ECS Anywhere.

Links & Literature

[1] https://www.bloomberg.com/press-releases/2020-07-30/software-as-a-service-saas-market-could-exceed-600-billion-by-2023

[2] https://docs.aws.amazon.com/AmazonECS/latest/developerguide/Welcome.html

[3] https://docs.aws.amazon.com/eks/latest/userguide/what-is-eks.html

[4] https://docs.docker.com/cloud/ecs-integration/

[5] https://docs.aws.amazon.com/AmazonECS/latest/developerguide/ecs-anywhere.html

[6] https://docs.aws.amazon.com/systems-manager/latest/userguide/ssm-agent-automatic-updates.html

[7] https://docs.aws.amazon.com/AmazonECS/latest/developerguide/ecs-anywhere-updates.html

[8] https://aws.amazon.com/blogs/containers/running-gpu-based-container-applications-with-amazon-ecs-anywhere/

[9] https://docs.aws.amazon.com/AmazonECS/latest/bestpracticesguide/networking-networkmode.html

[10] https://aws.amazon.com/blogs/containers/building-an-amazon-ecs-anywhere-home-lab-with-amazon-vpc-network-connectivity/

[11] https://github.com/inlets/inletsctl

[12] https://nathanpeck.com/ingress-to-ecs-anywhere-from-anywhere-using-inlets/

[13] https://aws.amazon.com/blogs/containers/announcing-amazon-ecs-deployment-circuit-breaker/

[14] https://docs.aws.amazon.com/AmazonECS/latest/developerguide/ecs_cwe_events.html

[15] https://docs.aws.amazon.com/AmazonCloudWatch/latest/monitoring/install-CloudWatch-Agent-commandline-fleet.html

[16] https://fluentbit.io

[17] https://docs.aws.amazon.com/AmazonECS/latest/developerguide/using_firelens.html