Microservices have been on everyone’s mind for a number of years now. Their advantages, but also the disadvantages associated with them, have been hotly debated. If one decides to take a microservices approach, the question of the technology stack arises relatively quickly. With Serverless and Functions as a Service, an alternative way to implement microservices has been available for a relatively short time.


Microservices – but pragmatic

I don’t want to deliver a complete treatise on microservices here. Still, I would like to discuss some of the central principles of successful microservices architectures in order to make the transition to the serverless world. The most important ones for me from an architectural point of view are:

- Single responsibility: A microservice does one (business) thing and does it right. It is a fine art to tailor microservices correctly and to find the balance between responsibility and complexity.

- Isolation: A microservice is isolated from other services. This requires physical separation and the possibility to communicate with the microservice via well-defined and technology-independent interfaces. In addition, it sometimes makes sense to use a special technology within a microservice in order to implement use cases optimally – the choice of technologies per microservice should be guaranteed via isolation.

- Autonomy: The supreme discipline in the design of microservices architectures – each service has its own data storage and does not share a database or similar with other services. Implementing this principle consistently means decoupling the services to a large extent – but at a price.

Probably the easiest way for you as a reader to get an idea of the microservices principles in a serverless world is with a small example. So let’s take a look at one. The sample application is also available with complete source code in a GitHub repository [1].

End-to-end considerations

An end-to-end view of a system is important for the consideration of microservices – whether serverless or not. It usually doesn’t help to look only at individual services or even individual implementation details of services: an overall view includes client applications as well as non-business services, such as authentication or notification services.

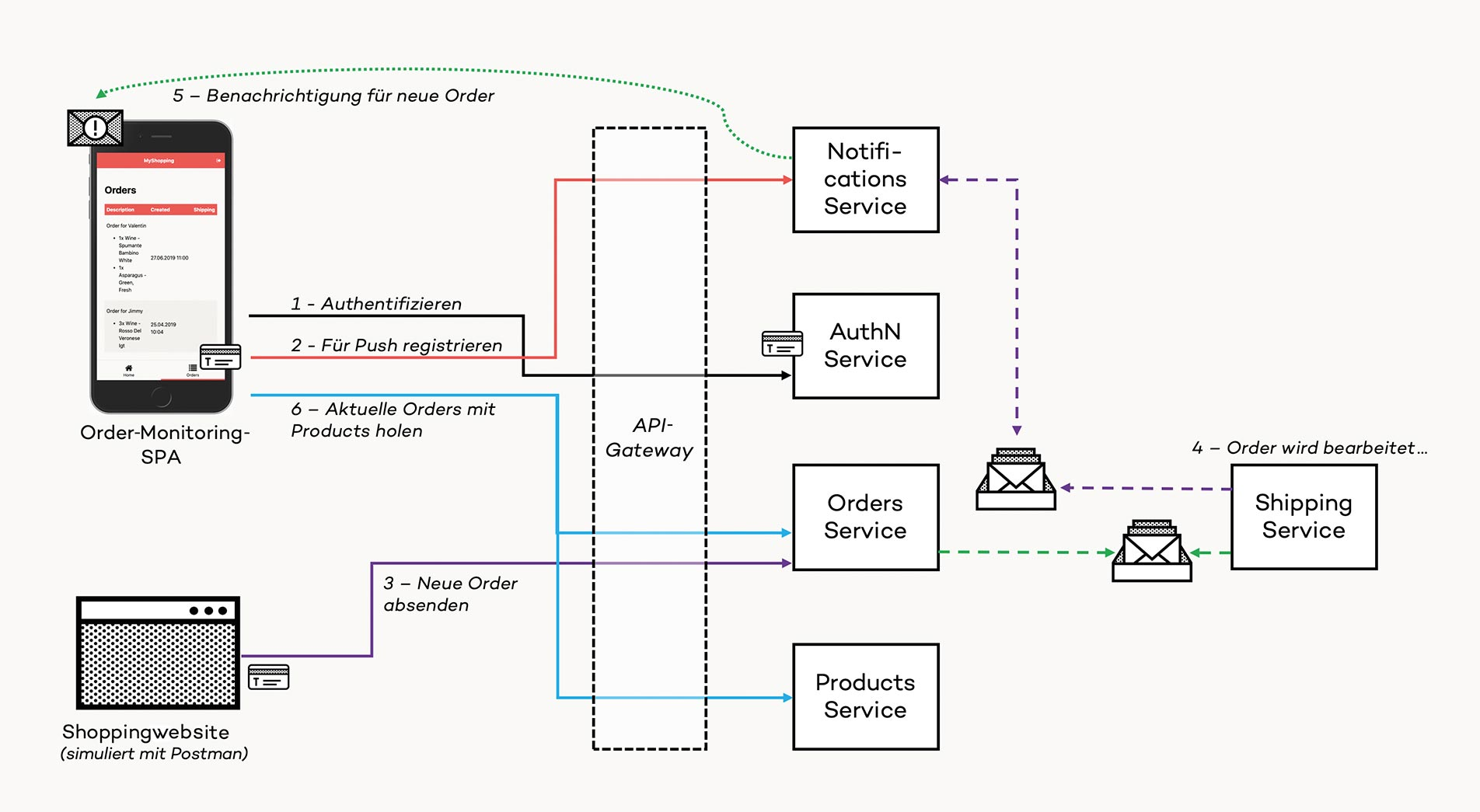

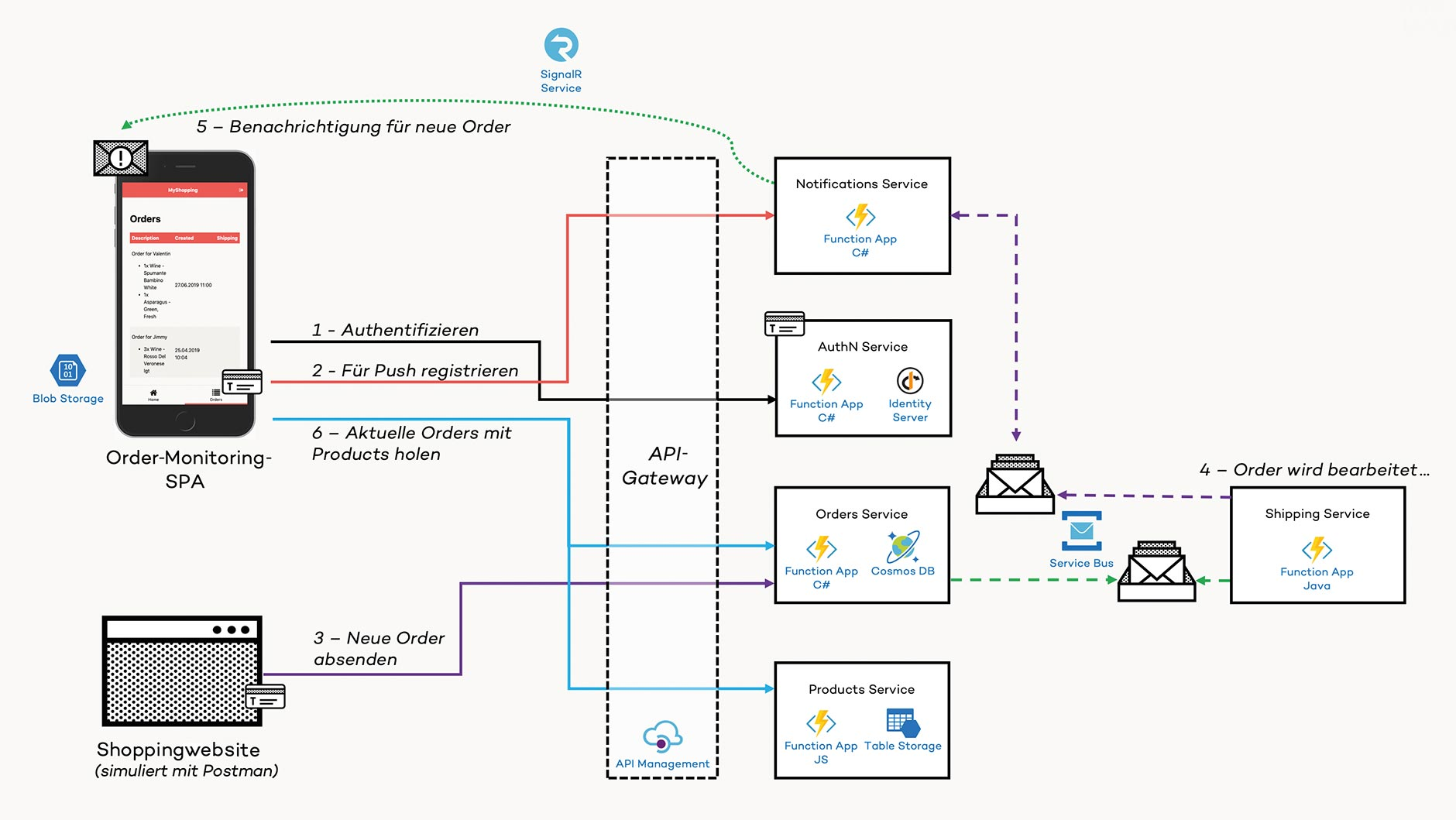

Our exemplary order monitoring system (Figure 1) – simplified, of course, and implemented for the purpose of this article – is quite simple in its basic concept: Whenever a registered user makes a purchase on the shopping website and places an order, we, as likewise registered users, want to be notified via a mobile app, which is implemented as a cross-platform SPA. We can also use this SPA to view the current list of orders and their delivery status.

Fig. 1: Serverless microservices example architecture (order monitoring)

As can be seen in Figure 1, five microservices are involved here. The interactions can be traced via numbered steps. In this case, the client SPA is not implemented as a hodgepodge of microfrontends, which would go far beyond the scope of the example. Instead, the SPA uses the different APIs of the microservices. For a simplified URL management and a better decoupling of the physical services, an API gateway [2] is used in the overall architecture. Of course, an API gateway is not necessary in such an architecture, but it is often used for further abstraction.
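To make the gateway idea a bit more tangible, here is a minimal sketch of what such an abstraction boils down to: a table that maps public path prefixes to the physical services behind them. The class name, the prefixes, and the host names are hypothetical placeholders for illustration; they are not taken from the sample application, and a real API gateway offers far more than this lookup.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;

/** Sketch of gateway-style routing: public path prefix -> physical service base URL. */
final class GatewayRoutes {
    // Hypothetical route table; insertion order decides which prefix is tried first.
    private final Map<String, String> routes = new LinkedHashMap<>();

    GatewayRoutes add(String publicPrefix, String backendBaseUrl) {
        routes.put(publicPrefix, backendBaseUrl);
        return this;
    }

    /** Resolves a public path to a backend URL, or empty if no prefix matches. */
    Optional<String> resolve(String publicPath) {
        for (Map.Entry<String, String> route : routes.entrySet()) {
            String prefix = route.getKey();
            // Match the prefix itself or any path below it, but not "/api/ordersX".
            if (publicPath.equals(prefix) || publicPath.startsWith(prefix + "/")) {
                return Optional.of(route.getValue() + publicPath);
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        // The host names below are placeholders, not real endpoints.
        GatewayRoutes gateway = new GatewayRoutes()
            .add("/api/orders", "https://orders.example.invalid")
            .add("/api/products", "https://products.example.invalid");
        System.out.println(gateway.resolve("/api/orders/42").orElse("no route"));
    }
}
```

The client only ever sees the public paths; which physical service answers behind a prefix can change without the SPA noticing.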

The first question that arises when looking at the application from the end user’s point of view: Where does the SPA come from and how does it get into my browser?

Home for SPAs: Azure Blob Storage

At the end of the day, a SPA is a package consisting of HTML, CSS, images and other assets. You don’t have to deploy this package to a web server or even an application server in a complicated and expensive way. Every cloud platform offers the very slim and cost-effective option of simply providing the SPA as a static overall structure via a storage service. In the case of Microsoft’s cloud this is Azure Blob Storage.

Practical tip: Azure Portal, CLI and REST API

For all Azure services, creation and configuration can be performed and automated via the Azure Portal, via a REST API or via the very powerful Azure CLI (Command Line Interface) [3].

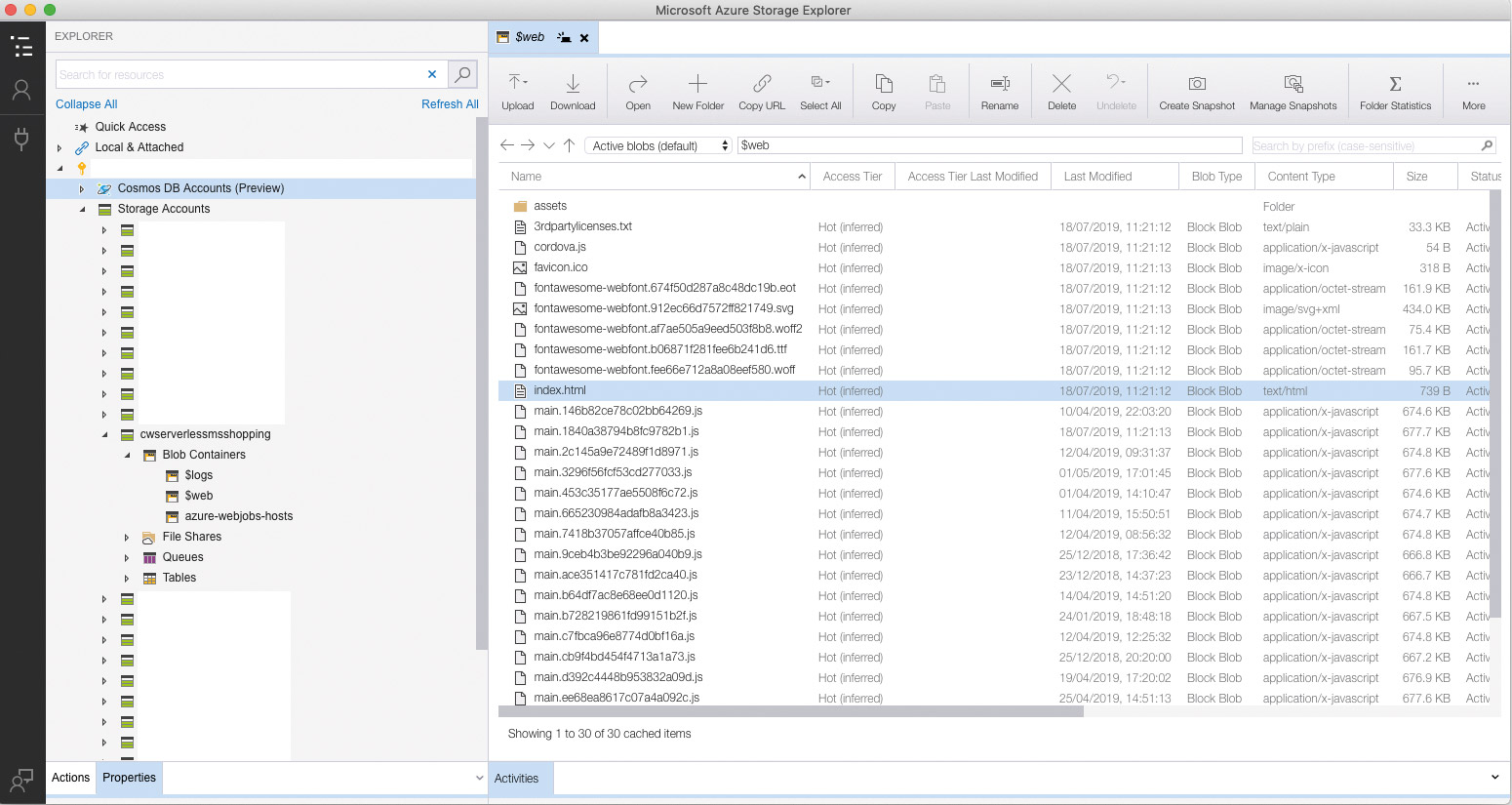

Once the storage account is created, you can enable the static website feature. After that, a container named $web is available. It serves as a kind of root directory of a static web server, except that it is served entirely by Blob Storage. Figure 2 shows the client SPA from the example, here deployed manually to the cloud using the Azure Storage Explorer [4] tool.

Fig. 2: Angular SPA provided via Azure Blob Storage

Of course, the endpoints for the blob storage-based web server are published via HTTPS. With additional Azure services such as Azure DNS and Azure CDN, it is also possible to establish your own domains, SSL certificates, and ultimately super fast CDN cache nodes. This is all done without having to touch or even know a single server.

So once the SPA is running in our browser via HTTPS and wants to retrieve data, we need APIs and interfaces in our services. We will now implement and provide them in a serverless fashion.

Microservice Code and Serverless – Azure Functions

Serverless code for your business logic or business processes can be executed via Azure Functions. Boris Wilhelms presents the basics of Azure Functions in his article in this magazine (p. 40). Azure Functions can do even more than is presented there. An important feature is that you are not limited to writing, uploading and executing C# code on .NET Core: you can also use F#, Java, JavaScript, TypeScript, PowerShell, or Python. For details on the platforms and languages supported at the time of writing this article, see Table 1.

| Language | Release | Version |
| --- | --- | --- |
| C# | Final | .NET Core 2.2 |
| F# | Final | .NET Core 2.2 |
| JavaScript | Final | Node 8 and 10 |
| TypeScript | Final | (Transpilation to JavaScript) |
| Java | Final | Java 8 |
| Python | Preview | Python 3.6 |
| PowerShell | Preview | PowerShell Core 6 |

Table 1: Platforms and languages supported in Azure Functions

Free choice of technology

The platform and language diversity in a microservices world should not be underestimated: as we have learned, the free choice of technologies that fit the problem at hand is a possible success factor. If, for example, you have a lot of Java code and want to take it with you into the serverless cloud, then simply do so with Azure Functions. If you want to implement data science algorithms in a serverless environment, you can do so with the Python support in Azure Functions. And if you want to use the almost infinite universe of Node modules, then write JavaScript or TypeScript code for Azure Functions.

Physical isolation

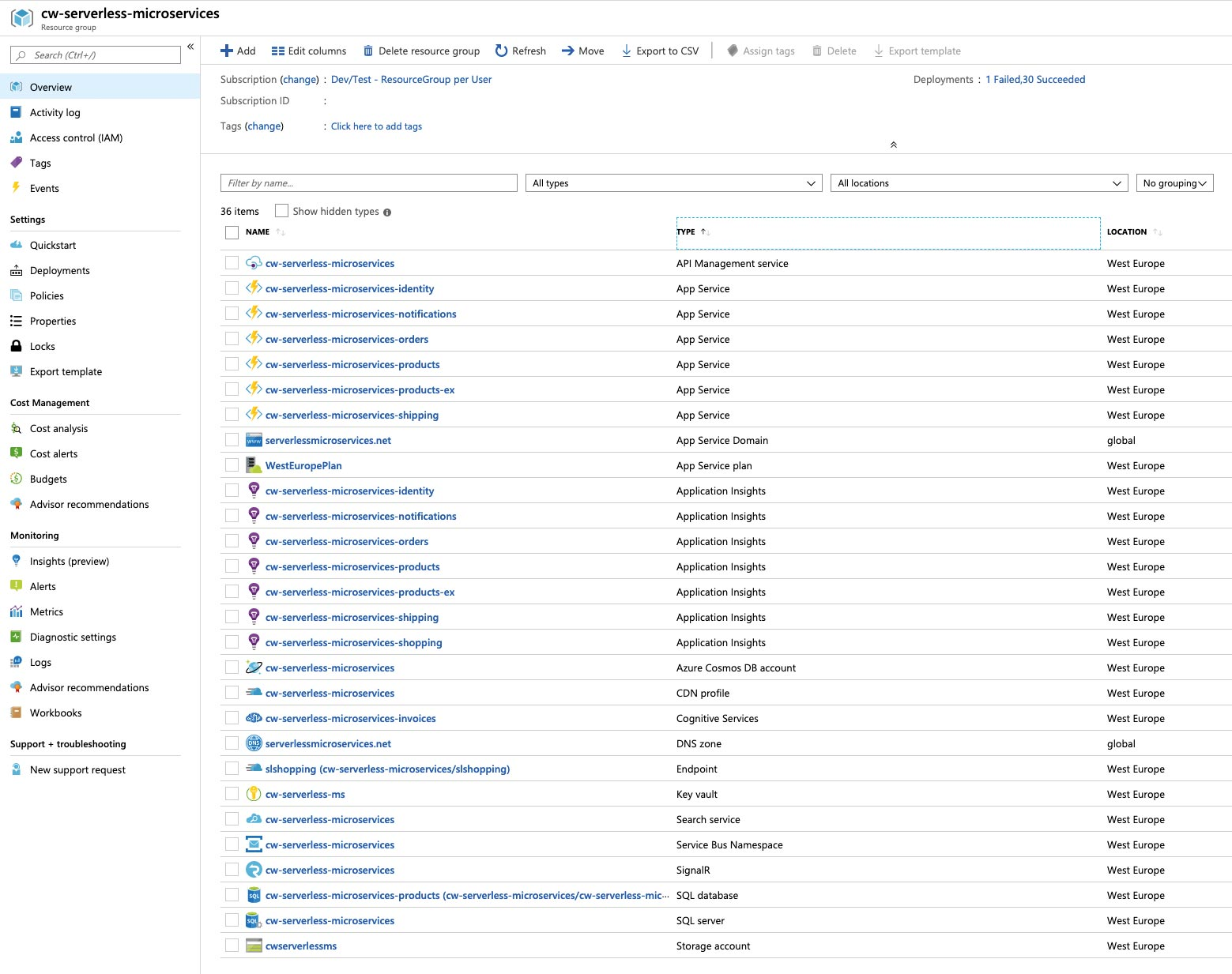

In order to ensure that each individual microservice really runs isolated from the others, functions are encapsulated in so-called Azure Functions apps. Figure 3 illustrates an Azure resource group for the entire serverless example application. All Azure resources involved are listed here. In total, there is much more than we can cover in this article. The Azure Functions apps (marked as App Service) for our microservices Identity, Notifications, Orders, Products, and Shipping (each with the naming scheme “cw-serverless-microservices-SERVICENAME”) play an important role.

Fig. 3: Azure resource group with, among others, individual Azure Functions apps for the respective microservices

Practical tip: Azure Functions apps and functions

An Azure Functions app is based on the so-called Azure App Service, one of the classic PaaS offerings in Azure. A Functions app is therefore a completely independent physical deployment. It contains the Azure Functions Runtime, always in a specific version and always for only one platform (i.e. .NET Core or Java or one of the other supported technologies). A classic microservice certainly consists of more than one function. As a collection of any number of functions, a Functions app is therefore a suitable container for a serverless microservice.

We provide our microservices as a combination of functions in function apps. But how can the client SPA talk to our serverless microservices?

HTTPS APIs

As shown in the mentioned article by Boris Wilhelms, you can easily implement HTTPS APIs with Azure Functions. This is exactly what we do for our order monitoring system. The Orders microservice has a function written in C# called SubmitNewOrder, which allows a client to send a new order via HTTPS POST. Listing 1 shows the complete code for this. The connection strings used in the example are not stored in the code but in the application settings, so they do not appear in this article. The HttpTrigger for the POST operation in lines 5-7 in particular should look familiar if you have read the previously mentioned article.

Listing 1

    public class SubmitNewOrderFunctions
    {
        [FunctionName("SubmitNewOrder")]
        public async Task<IActionResult> Run(
            [HttpTrigger(AuthorizationLevel.Anonymous,
                "POST", Route = "api/orders")]
            HttpRequest req,
            [ServiceBus("ordersforshipping", Connection="ServiceBus")]
            IAsyncCollector<Messages.NewOrderMessage> messages,
            ILogger log)
        {
            if (!await req.CheckAuthorization("api"))
            {
                return new UnauthorizedResult();
            }
            var newOrder = req.Deserialize<DTOs.Order>();
            newOrder.Id = Guid.NewGuid();
            newOrder.Created = DateTime.UtcNow;
            var identity = Thread.CurrentPrincipal.Identity as ClaimsIdentity;
            var userId = identity.Name;
            var newOrderMessage = new Messages.NewOrderMessage
            {
                Order = Mapper.Map<Entities.Order>(newOrder),
                UserId = userId
            };
            try
            {
                await messages.AddAsync(newOrderMessage);
                await messages.FlushAsync();
            }
            catch (ServiceBusException sbx)
            {
                // TODO: retry policy & proper error handling
                log.LogError(sbx, "Service Bus Error");
                throw;
            }
            return new OkResult();
        }
    }

But what exactly happens at the beginning of the function body? The CheckAuthorization method, called on the req parameter, deserves our full attention here.

Security tokens for all: IdentityServer

In order to secure our microservices system at the API boundaries and also via the decoupled communication paths at the application level, we use JSON Web Tokens (JWT) from the OAuth 2.0 and OIDC standards. The CheckAuthorization call in Listing 1 inspects the HTTP request in the Azure Functions pipeline to see whether it carries a valid JWT. The exact code can be found in the GitHub repository [1]. But where does this token originally come from?

One option in Azure for authenticating users and applications is Microsoft’s own Azure Active Directory (AAD) for internal users and their data, or alternatively AAD B2B for integrating external users. However, if you want more flexibility and full source code access in the form of an open source project, you can use the popular IdentityServer project [5]. IdentityServer is a framework, not a full-fledged server or even service. You adapt the basic functionality to your respective project and then host it in the cloud, for example, via Azure App Service.

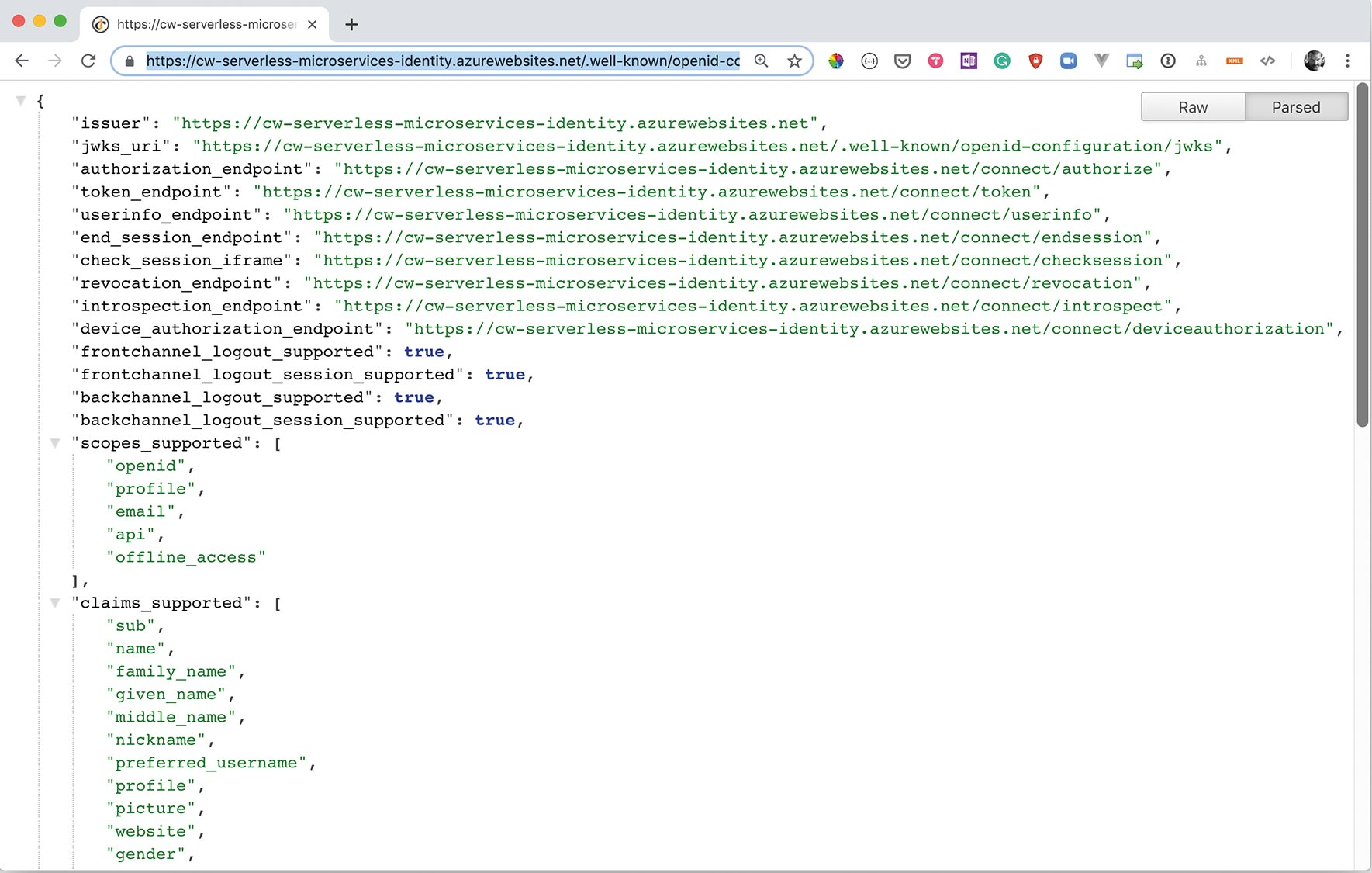

With a few tricks, IdentityServer can also be deployed in Azure Functions, since it is a normal ASP.NET Core MVC project [6]. Figure 4 shows an exemplary IdentityServer instance that looks rather unspectacular but runs completely serverless via Azure Functions [7]. In other words: a wolf in sheep’s clothing.


Fig. 4: IdentityServer hosted as a full OAuth 2.0 and OIDC framework in Azure Functions – serverless tokens, so to speak

Using the token issued by IdentityServer, consuming clients or services can make requests to our Products or Orders Service, for example. These services check the token to see whether it comes from a known issuer and has not expired. In addition, each business service can decide for itself whether further so-called claims must be checked in order to grant the caller access to the business logic. However, the token is not only used by the front-end services that the SPA addresses directly. Information from the token is also used by the decoupled and asynchronously operating microservices such as the Shipping Service. But how does this frequently mentioned decoupling work?
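The checks just described can be sketched in a few lines. The following is only an illustration under clear assumptions: it works on claims that have already been extracted from a token whose signature has been verified elsewhere, it uses the standard JWT claim names iss and exp, and it assumes that scopes arrive as a list under the claim name scope. The class and method names are hypothetical; the actual implementation in the sample is the CheckAuthorization code in the repository [1].

```java
import java.time.Instant;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Sketch of per-service token checks on already signature-verified claims. */
final class TokenChecks {
    /**
     * Accepts the claims only if the issuer is trusted, the token has not
     * expired, and the required scope is present. Signature validation is
     * deliberately NOT part of this sketch.
     */
    static boolean isAcceptable(Map<String, Object> claims, Set<String> trustedIssuers,
                                String requiredScope, Instant now) {
        Object issuer = claims.get("iss");
        Object expires = claims.get("exp"); // seconds since the epoch
        Object scopes = claims.get("scope"); // assumption: a list of scope names

        if (!(issuer instanceof String) || !trustedIssuers.contains(issuer)) {
            return false; // unknown issuer
        }
        if (!(expires instanceof Number)) {
            return false; // no usable expiry
        }
        Instant expiry = Instant.ofEpochSecond(((Number) expires).longValue());
        if (!now.isBefore(expiry)) {
            return false; // expired
        }
        // Service-specific claim check, here a required scope.
        return scopes instanceof List && ((List<?>) scopes).contains(requiredScope);
    }

    public static void main(String[] args) {
        // Hypothetical issuer URL and claim values for illustration.
        Map<String, Object> claims = Map.of(
            "iss", "https://identity.example.invalid",
            "exp", 2000L,
            "scope", List.of("api"));
        boolean accepted = isAcceptable(
            claims, Set.of("https://identity.example.invalid"), "api", Instant.ofEpochSecond(1000));
        System.out.println(accepted);
    }
}
```

Each service can run the first two checks identically and vary only the last one, which is what lets every business service decide on its own claims.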

Asynchronous decoupled communication: Azure Service Bus

We use a message queue system for decoupling on the time axis, that is, for asynchronous communication and thus, for the possibility of asynchronous code execution. In the case of Serverless Azure, this is the Azure Service Bus [8]. Service Bus is a very robust service in the Microsoft cloud, because it has been in existence for many years. For the purpose of interoperability, it uses the universally recognised Advanced Message Queuing Protocol (AMQP) version 1.0 [9]. In addition to simple message queues, the service bus can also be used to implement advanced patterns such as topics and subscriptions.

So that we can now separate the Orders Service from the Shipping Service in our example, the Orders Service places a message in a message queue called ordersforshipping. This can be seen clearly in the C# code in Listing 1 (lines 19-35). The API call messages.FlushAsync() instructs the service bus client library to send all messages accumulated in the code so far to the configured queue. In the best Azure Functions manner, messages are bound to a function output binding. This binding for the service bus is used asynchronously in the example: We therefore use an asynchronous API to exchange data in messages asynchronously. Makes sense.
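The add-then-flush contract described here can be modelled without any Azure dependency. The sketch below is not the Service Bus binding itself; it is a hypothetical stand-in (class and method names are made up) that only mirrors the behaviour as described above: messages are collected first and handed to the sender when flush is called. The real binding may well send earlier.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/** Sketch of a collector that buffers messages until flush() is called. */
final class BufferingCollector<T> {
    private final List<T> pending = new ArrayList<>();
    private final Consumer<List<T>> sender;

    /** The sender stands in for "write this batch to the configured queue". */
    BufferingCollector(Consumer<List<T>> sender) {
        this.sender = sender;
    }

    /** Only records the message; nothing is sent yet. */
    void add(T message) {
        pending.add(message);
    }

    /** Sends everything accumulated so far as one batch and empties the buffer. */
    void flush() {
        if (pending.isEmpty()) {
            return;
        }
        sender.accept(new ArrayList<>(pending));
        pending.clear();
    }

    public static void main(String[] args) {
        BufferingCollector<String> messages =
            new BufferingCollector<>(batch -> System.out.println("sending " + batch));
        messages.add("order-1");
        messages.add("order-2");
        messages.flush(); // both messages leave together
    }
}
```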

A look at Figure 1 tells us that the shipping service can only be reached via this message queue and otherwise does not offer any API. This means that it can quietly execute its business logic for processing orders and handling deliveries. It also means that any results or technical responses to order processes are also formulated as messages and must be written to a new queue.
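The flow through the two queues can be played through with a small in-memory model. This is a sketch with plain Java queues standing in for Service Bus queues; the class name, method names, and message strings are hypothetical. What it is meant to show is the shape of the interaction: submitting an order returns immediately, the shipping side picks the order up later, and its result is again just a message in a second queue.

```java
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;

/** In-memory sketch of queue-based decoupling between three services. */
final class QueueDecouplingSketch {
    // Stand-ins for the two queues named in the article.
    private final Queue<String> ordersForShipping = new ConcurrentLinkedQueue<>();
    private final Queue<String> shippingsInitiated = new ConcurrentLinkedQueue<>();

    /** Orders side: enqueue the order and return without waiting for shipping. */
    void submitOrder(String orderId) {
        ordersForShipping.add(orderId);
    }

    /** Shipping side: handle one pending order, if any, and publish the result. */
    boolean processNextOrder() {
        String orderId = ordersForShipping.poll();
        if (orderId == null) {
            return false; // nothing to do right now
        }
        shippingsInitiated.add("shipping-created:" + orderId);
        return true;
    }

    /** Notifications side: fetch the next result message, or null if none exists yet. */
    String nextNotification() {
        return shippingsInitiated.poll();
    }

    public static void main(String[] args) {
        QueueDecouplingSketch system = new QueueDecouplingSketch();
        system.submitOrder("order-1");
        // The order is accepted, but shipping has not run yet.
        System.out.println(system.nextNotification());
        system.processNextOrder();
        System.out.println(system.nextNotification());
    }
}
```

In the real system the three methods live in three separately deployed Functions apps and run at different times; the queues are the only thing they share.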

While the Orders Service was implemented in C# with .NET Core, the Shipping Service is a Java-based solution. This also shows us that Azure Functions really works across multiple platforms. Listing 2 shows all the splendor of simple Java code in a function with a Service Bus trigger and a Service Bus output binding. Using the trigger in line 11, the Java code listens on the message queue ordersforshipping. After the extremely complex code with all its complex business logic has been processed, a result message is generated, filled, and finally sent to the shippingsinitiated queue as the return value of the function (lines 19 to 31).

Listing 2

    public class CreateShipment {
        /**
         * @throws InterruptedException
         * @throws IOException
         */
        @FunctionName("CreateShipment")
        @ServiceBusQueueOutput(name = "$return",
            queueName = "shippingsinitiated",
            connection = "ServiceBus")
        public String run(
            @ServiceBusQueueTrigger(name = "message",
                queueName = "ordersforshipping",
                connection = "ServiceBus")
            NewOrderMessage msg,
            final ExecutionContext context
        ) throws InterruptedException, IOException {
            // NOTE: Look at our complex business logic ;-)
            // Yes - do the REAL STUFF here!
            Thread.sleep(5000);
            ShippingCreatedMessage shippingCreated =
                new ShippingCreatedMessage();
            shippingCreated.Id = UUID.randomUUID();
            shippingCreated.Created = new Date();
            shippingCreated.OrderId = msg.Order.Id;
            shippingCreated.UserId = msg.UserId;
            Gson gson = new GsonBuilder().setDateFormat(
                "yyyy-MM-dd'T'HH:mm:ss.SSSSSS'Z'").create();
            String shippingCreatedMessage = gson.toJson(shippingCreated);
            return shippingCreatedMessage;
        }
    }
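A side note on the date pattern passed to Gson in Listing 2: assuming the pattern is interpreted with SimpleDateFormat semantics, as the Gson documentation for setDateFormat indicates, the following sketch shows what the pattern produces. The class name is hypothetical, and pinning the time zone to UTC is an addition for this sketch that Listing 2 does not make. Two details are worth knowing: the trailing 'Z' is a quoted literal and says nothing about the actual time zone, and six S letters zero-pad the milliseconds to six digits rather than yielding microseconds.

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.Locale;
import java.util.TimeZone;

/** Sketch: what the date pattern from Listing 2 yields with SimpleDateFormat. */
final class ShippingDateFormat {
    static String format(Date date) {
        SimpleDateFormat formatter =
            new SimpleDateFormat("yyyy-MM-dd'T'HH:mm:ss.SSSSSS'Z'", Locale.US);
        // 'Z' is only a literal character, so UTC is pinned explicitly here
        // to make the suffix truthful (an assumption of this sketch).
        formatter.setTimeZone(TimeZone.getTimeZone("UTC"));
        return formatter.format(date);
    }

    public static void main(String[] args) {
        // 123 milliseconds after the epoch
        System.out.println(format(new Date(123L))); // prints 1970-01-01T00:00:00.000123Z
    }
}
```

Whoever consumes the message on the other side should therefore read the fraction as padded milliseconds.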

You can see and feel how much you can concentrate on the implementation of the actual logic with Azure Functions, its triggers and bindings, and how little you have to deal with the infrastructure. That’s how it should be, because we want to build software solutions, not infrastructure monsters.

The Shipping Service has done its duty and stored the resulting information in a message queue. The Orders Service doesn’t really care about this, but someone else in the overall system does care about this information, because the end user has registered for notifications about shipping news.

Web-based real-time notifications: Azure SignalR Service

Familiar from the push notifications of our favorite mobile apps, real-time notifications are becoming increasingly important in the business context as well. In the order monitoring system, the SPA user would like to know when a new order has been created successfully, and especially when this order is being delivered.

For real-time notifications in the web environment, WebSockets are often used as the underlying technology at application level. However, WebSockets do not work everywhere (browser versions, network proxies etc.). In addition, connection failures must be handled by the application itself, and the actual application-specific protocol for data exchange must be defined and implemented each time. To make all these requirements a bit more manageable, there is SignalR from the ASP.NET Core team and the Azure SignalR Service [10] from the Azure team. For SignalR there are client libraries for all kinds of platforms and programming languages, first and foremost of course JavaScript. We use the JavaScript library here in our SPA.

For our Azure Functions there are also bindings for the Azure SignalR Service. Who would have thought it? The Notifications Service (see Fig. 1) uses a Service Bus trigger to fetch messages from the shippingsinitiated queue (Listing 3). In the function, these messages are then prepared and sent to the previously registered clients via the SignalR Service, using the SignalR Service output binding (the SignalR attribute on the notificationMessages parameter). Done.

Via the SignalR connection (in most cases this will physically be a WebSocket connection), the clients are informed that there are new data/arguments for the target shippingInitiated. The client can then decide how to handle this. In the SPA, an event is simply triggered locally and then the current list of orders with all product information is loaded from the respective services.

Listing 3

    public class NotifyClientsAboutOrderShipmentFunctions
    {
        [FunctionName("NotifyClientsAboutOrderShipment")]
        public async Task Run(
            [ServiceBusTrigger("shippingsinitiated", Connection = "ServiceBus")]
            ShippingCreatedMessage msg,
            [SignalR(HubName="shippingsHub", ConnectionStringSetting="SignalR")]
            IAsyncCollector<SignalRMessage> notificationMessages,
            ILogger log)
        {
            var messageToNotify = new {
                userId = msg.UserId, orderId = msg.OrderId };
            await notificationMessages.AddAsync(new SignalRMessage
            {
                Target = "shippingInitiated",
                Arguments = new[] { messageToNotify }
            });
        }
    }
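On the receiving side, the Target and Arguments of such a message translate into a simple idea: handlers are registered under a target name and are called with the arguments when a message for that target arrives. The sketch below illustrates only this dispatch principle with a hypothetical class; it is not the SignalR client API, and the SPA in the sample uses the JavaScript client library instead.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

/** Sketch of target-based dispatch as a real-time client performs it. */
final class TargetDispatcher {
    private final Map<String, List<Consumer<Object[]>>> handlers = new HashMap<>();

    /** Registers a handler for messages addressed to the given target name. */
    void on(String target, Consumer<Object[]> handler) {
        handlers.computeIfAbsent(target, key -> new ArrayList<>()).add(handler);
    }

    /** Delivers the arguments to all handlers of the target; returns how many ran. */
    int dispatch(String target, Object... arguments) {
        List<Consumer<Object[]>> registered = handlers.getOrDefault(target, List.of());
        for (Consumer<Object[]> handler : registered) {
            handler.accept(arguments);
        }
        return registered.size();
    }

    public static void main(String[] args) {
        TargetDispatcher client = new TargetDispatcher();
        // Hypothetical reaction: in the SPA this is where the order list is reloaded.
        client.on("shippingInitiated",
            arguments -> System.out.println("reload orders, triggered by " + arguments[0]));
        client.dispatch("shippingInitiated", "order-1");
    }
}
```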

We have already achieved a lot with our idea of Serverless Microservices. And the nice thing about Serverless is that you can implement complex distributed architectures relatively quickly and easily. Here a service, there a service. Here a client, there a queue, there a notification service. But who keeps the overview? What happens where and how?


“I see something you don’t” – Azure Application Insights

Certainly, such a distributed and loosely coupled architecture does not only have advantages. Being able to look into such a system at runtime is enormously important, for example, to detect and react to errors. For Azure, we can use Application Insights [11] for this (it is currently being integrated into the so-called Azure Monitor).

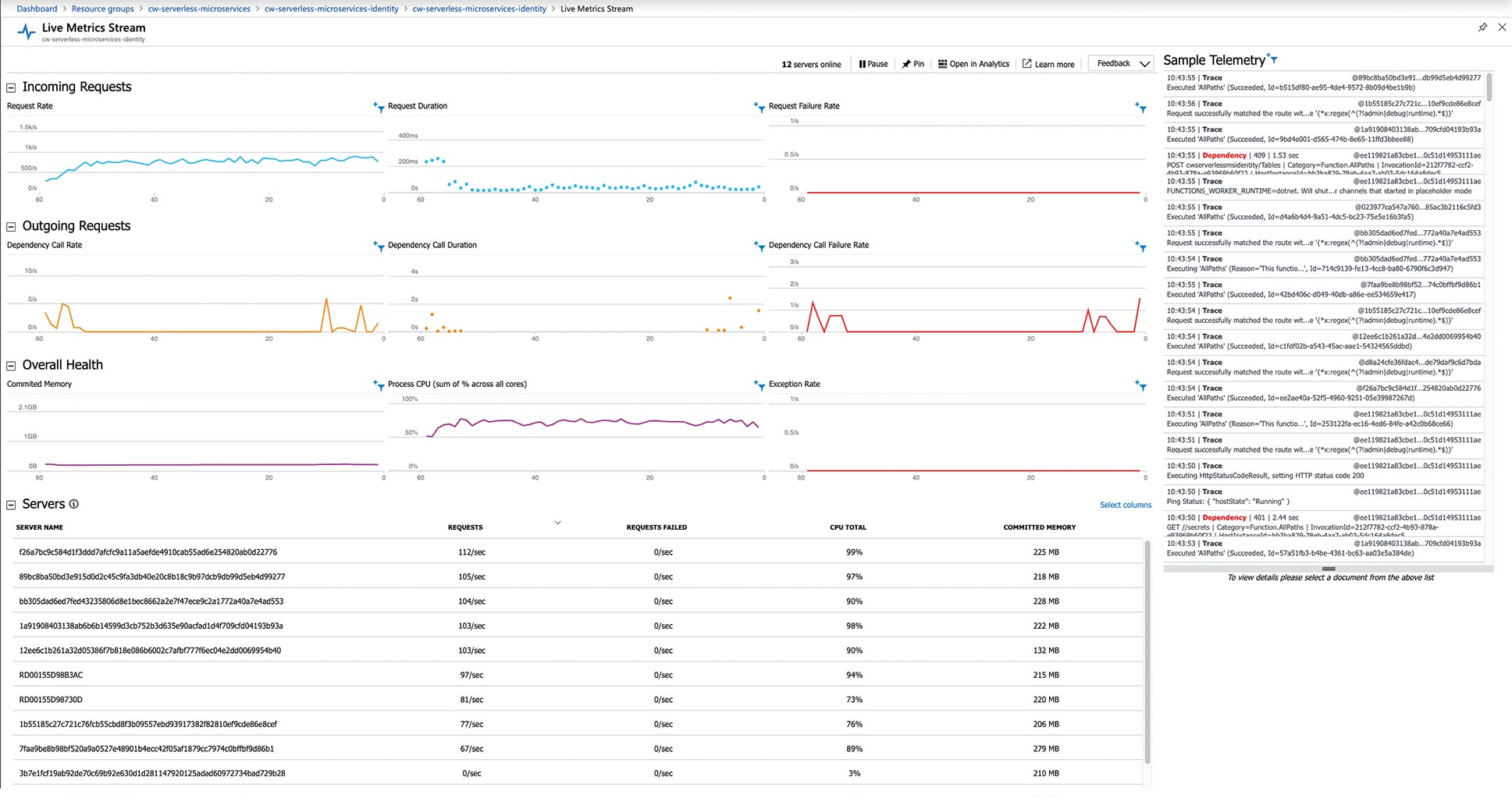

With Application Insights we can monitor the runtime behavior of our systems. For errors or threshold values that occur with specific metrics, we can also define rules for what should happen next – for example, sending emails or similar. In the context of our serverless sample application for this article, we will take a look at the current state of our Identity Service. Via the Live Metrics Stream we can actually look into the heart of our Identity Service in real time.

As you can see in Figure 5, we get insight into the telemetry data, requests, memory and CPU usage, and also the automatically managed number of server instances in our serverless Azure Functions application. This is information that should not really matter to us, since we explicitly don’t want to have anything to do with servers anymore. But it is quite interesting to observe how Azure Functions behaves under load. In the screenshot you can see that we process about 800-900 requests per second and that the Azure Functions Runtime, or more precisely its Scale Controller, has meanwhile scaled out to twelve server instances – without any action on our part. When the load decreases, the Scale Controller will take care of the scale-in again. It is important to note that we only pay when our code is actually executed, no matter how many servers are sitting around twiddling their thumbs in the meantime.

Fig. 5: Real-time insights into serverless microservices with Application Insights Live Metrics

End-to-end microservices with Serverless

If you take all the artifacts drawn in Figure 1 and map them to Azure services, you arrive at Figure 6: the implementation of the order monitoring system with serverless Azure services – voilà.

In the course of the article we looked together at how to implement end-to-end microservices in a pragmatic way with Serverless and the Azure Cloud. With Azure Functions and other matching Azure services, this can be achieved with relatively little effort in a short time. Of course, the devil is in the details, without question. And of course any presentation in the context of this article can only scratch the surface. Therefore, at the end of this article, we call on you to take a look at the example’s source code [1].

Fig. 6: Serverless microservices example – implemented with serverless Azure services

Links & Literature

[1] Serverless microservices sample application: https://github.com/thinktecture/serverless-microservices/

[2] API Gateway pattern: https://microservices.io/patterns/apigateway.html

[3] Azure CLI: https://docs.microsoft.com/en-us/cli/azure/?view=azure-cli-latest

[4] Azure Storage Explorer: https://azure.microsoft.com/en-us/features/storage-explorer/

[5] IdentityServer: https://github.com/IdentityServer/IdentityServer4/

[6] IdentityServer in Azure Functions: http://www.luckenuik.net/migrate-your-aspnet-core-based-identityserver-inside-azure-functions/

[7] Azure Functions: https://azure.microsoft.com/en-in/services/functions/

[8] Azure Service Bus: https://azure.microsoft.com/en-us/services/service-bus/

[9] AMQP Version 1.0: https://www.amqp.org/resources/specifications

[10] Azure SignalR Service: https://azure.microsoft.com/en-us/services/signalr-service/

[11] Azure Monitor (with Application Insights): https://azure.microsoft.com/en-in/services/monitor/