Understanding Serverless

The term serverless encompasses technologies for running code, managing data, and integrating applications without managing any servers. Typically, serverless services adjust to demand automatically, without the need to manually increase or decrease the amount of provisioned resources. At the same time, the pay-for-use billing model means costs are aligned with actual demand, so you pay for value, not for unused capacity or idle resources.

Infrastructure management tasks, such as server and operating system maintenance, security isolation, high availability, application runtime updates, and logging, are handled by the serverless service. Because these tasks are included in the cost of the service, serverless architectures can lower an application’s overall Total Cost of Ownership (TCO).

Reducing time spent on infrastructure management also allows you to focus on activities that maximize business value for your own customers. By leveraging automation and reducing operational complexity as much as possible, serverless enables you to prioritize developing business logic, releasing features faster, building new products and services, and consequently winning more customers.

Amazon Web Services (AWS) offers serverless services in several categories. These include compute services, such as AWS Lambda for event-driven compute or AWS Fargate for serverless containers. Serverless application integration services such as Amazon Simple Notification Service (Amazon SNS), Amazon Simple Queue Service (Amazon SQS), or Amazon EventBridge make it easy to integrate and orchestrate different service building blocks into applications. Finally, services like Amazon Simple Storage Service (Amazon S3) and Amazon DynamoDB provide serverless data storage. For a more comprehensive overview of available serverless services, see Serverless on AWS.

Serverless compute with AWS Lambda

AWS Lambda lets you run code written in a variety of programming languages, such as Python, Java, or TypeScript, in a serverless compute environment without managing any infrastructure. You can author Lambda functions in your IDE of choice or in the AWS Management Console. To deploy a Lambda function, the code assets or compiled programs and their dependencies are packaged as a .zip archive or a container image. Typically, infrastructure as code (IaC) tools, such as the AWS Serverless Application Model (AWS SAM), AWS Cloud Development Kit (AWS CDK), Terraform, or others, handle the deployment for you.

Lambda is a great fit for a variety of use cases, including web applications and mobile app backends, file and stream processing, internet of things (IoT) backends, machine learning inference, IT automation tasks, and more.

AWS Lambda scaling and concurrency

Lambda is designed to scale automatically and seamlessly with the number of incoming requests. The Lambda service accomplishes this by increasing the number of concurrent executions of your functions.

When a Lambda function is invoked, the Lambda service creates an execution environment containing your chosen runtime, function code, and dependencies. It executes the function code to process the incoming event. Once the processing is complete, the execution environment remains active for a period of time, ready to handle additional events.

If new events arrive while the execution environment is busy, Lambda creates additional execution environments of the function to handle those requests simultaneously. Depending on factors such as the Lambda function’s size and configuration, new execution environments can be created within double-digit milliseconds. This latency will be higher if a function is invoked immediately after it is created or updated, or if it has not been used recently.
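The reuse of execution environments described above can be observed from inside a function. The following is a minimal sketch, not taken from the sample repository: the handler name `reuse_handler` and the returned field names are hypothetical. It relies on the fact that module-level Python code runs once when an execution environment is initialized, while the handler runs once per invocation.

```python
import time

# Module-level code runs once per execution environment (during initialization),
# not once per invocation.
INITIALIZED_AT = time.time()
invocation_count = 0


def reuse_handler(event, context):
    """Hypothetical handler reporting how often this environment has been reused."""
    global invocation_count
    invocation_count += 1
    return {
        "invocations_in_this_environment": invocation_count,
        "environment_age_seconds": round(time.time() - INITIALIZED_AT, 3),
    }
```

A counter greater than 1 indicates that the invocation was served by an already initialized (warm) environment. This is also why expensive setup, such as creating SDK clients, is usually placed outside the handler.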

By default, an AWS account can scale up to 1,000 concurrent Lambda executions per AWS Region. Furthermore, the burst concurrency quota defines how fast Lambda functions can scale up. These quotas can be increased to meet the needs of your workload by requesting a limit increase through the Service Quotas Console or AWS Support.
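To judge whether a workload fits within the default quota, a common rule of thumb is that the required concurrency is roughly the request rate multiplied by the average function duration. The sketch below applies that estimate; the function names and the example traffic figures are illustrative assumptions, and only the 1,000 default comes from the text above.

```python
import math

DEFAULT_REGIONAL_CONCURRENCY = 1000  # default account quota per AWS Region


def estimate_concurrency(requests_per_second: float, avg_duration_seconds: float) -> int:
    """Estimate concurrent executions as request rate times average duration."""
    return math.ceil(requests_per_second * avg_duration_seconds)


def exceeds_default_quota(requests_per_second: float, avg_duration_seconds: float) -> bool:
    """Return True if the estimate is above the default regional quota."""
    needed = estimate_concurrency(requests_per_second, avg_duration_seconds)
    return needed > DEFAULT_REGIONAL_CONCURRENCY
```

For example, 100 requests per second at an average duration of 0.5 seconds needs about 50 concurrent executions, while 4,000 requests per second at the same duration needs about 2,000 and would require a quota increase.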

To learn more about Lambda’s scaling behavior by example, see Understanding AWS Lambda scaling and throughput, the On-demand scaling example in the AWS Lambda Operator Guide, and this deep dive talk into how AWS Lambda works under the hood.

Billing, right-sizing, and CPU/memory interplay in Lambda

Lambda pricing has two components: the number of requests made to a function and the execution duration, measured in GB-seconds. This execution duration is metered in 1 millisecond increments and multiplied by the function’s allocated memory. Thus, a function that runs for 100 ms with 2 GB of memory allocated is billed at the same price as a function that runs for 200 ms with only 1 GB of allocated memory.
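The billing arithmetic can be written out as a short calculation. This is a sketch under stated assumptions: the two price constants are illustrative placeholders rather than authoritative rates, so consult the AWS Lambda pricing page for the values that apply to your Region and architecture.

```python
# Placeholder prices for illustration only (assumptions, not authoritative rates).
PRICE_PER_GB_SECOND = 0.0000166667
PRICE_PER_REQUEST = 0.20 / 1_000_000


def gb_seconds(duration_ms: float, memory_mb: int) -> float:
    """Billed duration in GB-seconds: seconds of runtime times allocated memory in GB."""
    return (duration_ms / 1000) * (memory_mb / 1024)


def invocation_cost(duration_ms: float, memory_mb: int) -> float:
    """Cost of one invocation: duration charge plus the per-request charge."""
    return gb_seconds(duration_ms, memory_mb) * PRICE_PER_GB_SECOND + PRICE_PER_REQUEST
```

With these definitions, `gb_seconds(100, 2048)` and `gb_seconds(200, 1024)` both evaluate to 0.2 GB-seconds, which matches the equivalence stated above.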

CPU resources are allocated to a Lambda function in proportion to the amount of memory selected. Adding more memory proportionally increases the amount of CPU and, thus, the overall computational power of the function. If a function is CPU-intensive, increasing its memory setting can improve its performance. With 1,769 MB of memory allocated, a function can access the equivalent of one vCPU. As the memory allocated increases, Lambda scales the number of vCPUs proportionally, for example, 2 vCPUs for 3,538 MB, and up to 6 vCPUs at 10 GB.

Right-sizing the memory allocated to a Lambda function can result in both cost savings and performance gains. AWS Lambda Power Tuning is an open-source tool that semi-automates function right-sizing by benchmarking a function at different memory settings to find the best balance of execution time and cost.

Lambda invocation types

There are several ways to invoke Lambda functions; we’ll review them in this section, along with what you should be aware of for each invocation model.

Synchronous invocation

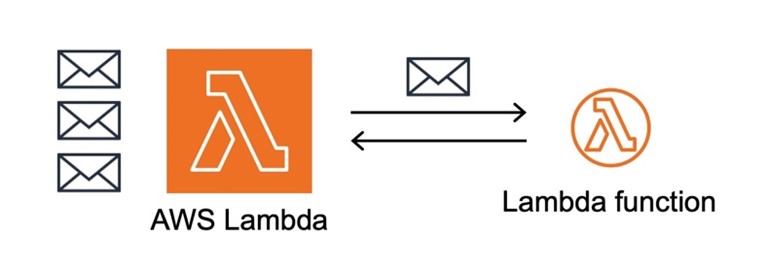

In a synchronous invocation, the caller expects and waits for a response before proceeding. Typically, the caller is blocked and remains idle until it receives the response. In addition, the caller is responsible for handling any errors and attempting any retries.

When invoking a Lambda function synchronously, the Lambda service executes the function immediately, sends one or more events to it, and waits for a response. When the function invocation completes, the Lambda service returns the response from the function to the caller. Synchronous invocations provide a simple and straightforward way to invoke and get a response from a Lambda function when a response is desired. A typical scenario is a REST API that uses HTTP as the underlying synchronous protocol for communication.

Figure 1: AWS Lambda synchronous invocation

Popular services that invoke Lambda functions synchronously are: Amazon API Gateway, Amazon Kinesis Data Firehose, and Elastic Load Balancing (Application Load Balancer).
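Besides the services listed above, your own code can invoke a function synchronously through the AWS SDK. The following sketch uses the boto3 Lambda client's `invoke` call with `InvocationType="RequestResponse"`. The helper name `invoke_sync` is hypothetical, and the client is passed in as a parameter so that the helper can be exercised without AWS credentials.

```python
import json


def invoke_sync(lambda_client, function_name: str, payload: dict) -> dict:
    """Invoke a function synchronously and return its decoded JSON response."""
    response = lambda_client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse",  # caller blocks until the function returns
        Payload=json.dumps(payload).encode("utf-8"),
    )
    # With synchronous invocations, error handling and retries are up to the caller.
    if "FunctionError" in response:
        raise RuntimeError(f"{function_name} failed: {response['FunctionError']}")
    return json.loads(response["Payload"].read())
```

In a real environment, the client would be created with `boto3.client("lambda")` and the function name would be that of a function deployed in your account.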

Asynchronous invocation

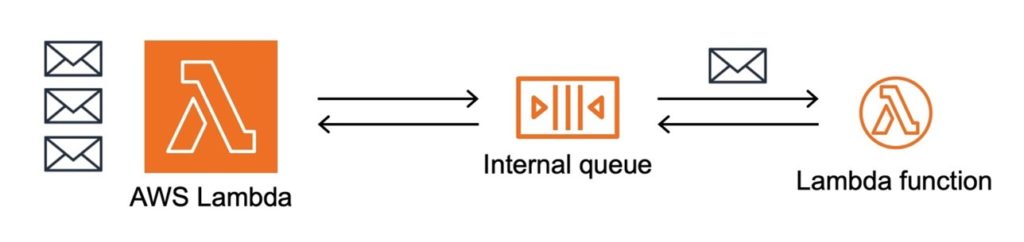

In scenarios where the work that needs to be done in a backend application may take a long time, such as seconds, or trigger a background process that takes minutes or hours to complete, an asynchronous integration is more appropriate.

In an asynchronous invocation, the caller returns and continues its execution flow after invoking the Lambda function. A response is either not expected or handled via a separate mechanism.

For asynchronous invocations, the Lambda service receives incoming events and places them on an internal queue. The service immediately returns a success response to the caller but does not wait for the function to execute. The event is retrieved from the queue as soon as possible, and the function is invoked. This internal queue allows Lambda to manage scaling and retries, and it can buffer events for up to 6 hours. If the execution of the function fails or times out, the Lambda service retries the function two more times.

Figure 2: AWS Lambda asynchronous invocation

One of the mechanisms that Lambda offers to handle execution status and asynchronous responses is Lambda destinations. Successful invocations, as well as failed ones, can be routed as execution records to one of the following AWS services for further processing: another Lambda function, SNS, SQS, or EventBridge. For an in-depth discussion of Lambda destinations and their use cases, refer to the following AWS blog post.

Popular AWS services that invoke Lambda asynchronously include Amazon Simple Storage Service (Amazon S3) and Amazon SNS.
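An asynchronous invocation can also be triggered directly through the AWS SDK. This sketch uses the boto3 Lambda client's `invoke` call with `InvocationType="Event"`, in which case the service queues the event and responds with HTTP status 202 without waiting for the function to run. The helper name `invoke_async` is hypothetical, and the client is injected so that the code can be tried without AWS access.

```python
import json


def invoke_async(lambda_client, function_name: str, payload: dict) -> bool:
    """Queue an event for asynchronous processing; return True if it was accepted."""
    response = lambda_client.invoke(
        FunctionName=function_name,
        InvocationType="Event",  # the service queues the event and returns immediately
        Payload=json.dumps(payload).encode("utf-8"),
    )
    # 202 means the event was accepted, not that the function succeeded.
    return response["StatusCode"] == 202
```

Because the caller only learns that the event was accepted, the outcome of the function has to be observed through a separate mechanism such as Lambda destinations.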

Event source mapping-based invocation

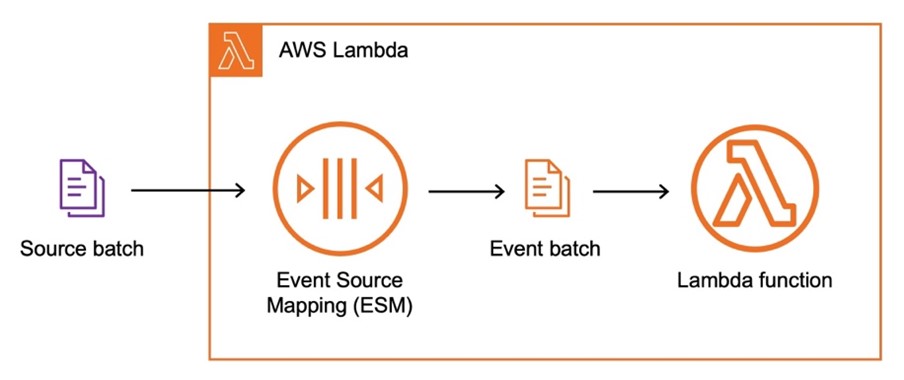

Event source mapping (ESM), also known as poll-based invocation, is an AWS Lambda invocation type specifically for integrating with messaging channels, such as streams and queues. With ESM, Lambda can, for example, read records from an Amazon Kinesis data stream and invoke the function with those records as payload. The ESM is essentially a poller managed by the Lambda service, and its exact behavior and capabilities depend on the service used as a source.

With ESM, the Lambda service pulls messages from the source and then invokes the function synchronously. ESM-based invocations support batching records in the source into a single payload before invoking the function.

Popular services that Lambda can read events from via ESM include Amazon Kinesis Data Streams, Amazon SQS, and Amazon DynamoDB streams.

Figure 3: AWS Lambda event source mapping-based invocation
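To make the batching concrete, here is a sketch of a handler that receives a batch of records from a Kinesis data stream through an ESM. In Kinesis events, each record's payload arrives base64-encoded under `record["kinesis"]["data"]`. The handler name `kinesis_handler` is hypothetical, and the sketch assumes that producers write JSON documents to the stream.

```python
import base64
import json


def kinesis_handler(event, context):
    """Decode every record in the batch delivered by the event source mapping."""
    decoded = []
    for record in event["Records"]:
        raw = base64.b64decode(record["kinesis"]["data"])
        decoded.append(json.loads(raw))  # assumption: producers send JSON
    return {"processed": len(decoded), "items": decoded}
```

A single invocation therefore handles as many records as the ESM placed into the batch, rather than one record per invocation.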

Learning by example

To illustrate the concepts introduced in the previous section, the following section presents two sample applications that you can deploy to your AWS account using the provided IaC templates.

The first example shows an event source mapping-based AWS Lambda invocation as part of an asynchronous message processing application in which a Lambda function processes messages submitted to an Amazon SQS queue.

The second example includes a synchronously invoked RESTful API for analyzing the sentiment of text sent to an API endpoint.

To follow the walkthroughs below, you will need an AWS account. Be aware that by deploying the sample applications to your account, you will incur costs for some resources beyond the AWS Free Tier usage limits; consult the AWS Pricing page for details. After you have finished a walkthrough, make sure to follow the clean-up instructions to delete all resources.

Deploying Lambda functions with AWS SAM

To deploy the sample applications, you can use the AWS Serverless Application Model (AWS SAM). AWS SAM is an open-source IaC tool for building serverless applications. It provides a shorthand syntax for expressing functions, APIs, databases, and event source mappings. With just a few lines per resource, you can define the application using YAML or JSON.

Complete the AWS SAM prerequisites and install the AWS SAM Command Line Interface (AWS SAM CLI) to deploy the IaC templates provided as part of this article.

AWS SAM is just one of several IaC tools available for developing serverless applications on AWS. Other options include the AWS CDK or third-party frameworks such as Terraform, the Serverless Framework, and SST.

Implementing a serverless message processing architecture

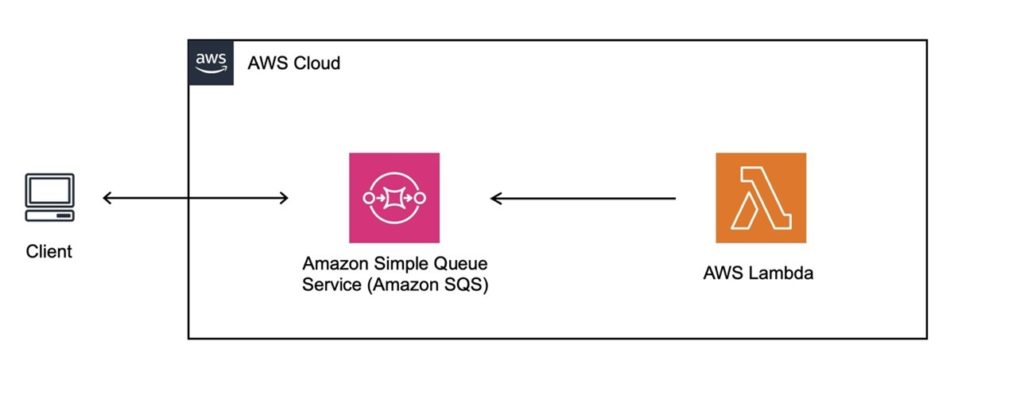

In this example, you will use Lambda as a consumer of messages in a messaging channel, in this case a serverless Amazon SQS queue.

A common use case for SQS queues is to decouple message producers and consumers in event-driven architectures, enabling asynchronous communication between two or more microservices in a distributed backend system. As discussed earlier, Amazon SQS is supported as a poll-based (ESM) source for Lambda, and hence, in this example, the Lambda service polls the queue. After reading from the queue and aggregating the messages in batches according to the configured batch size of 10, the Lambda ESM component invokes the function synchronously.

Figure 4: Serverless message processing architecture

While a batch of messages is being processed by an instance of the Lambda function, those messages remain temporarily hidden in the queue. No other instance of the function is able to receive them until the instance that is processing them has either completed or terminated with an error. This behavior is called the visibility timeout in SQS. SQS expects the consumer to delete messages from the queue when they are successfully processed. Hence, when the function has successfully processed a batch, the Lambda service deletes the messages from the queue. Thanks to ESM, there is no need to implement this logic in the function’s code.

In contrast, if the function encounters an error while processing the batch, all messages in that batch become visible again in the queue after the visibility timeout expires, and they can be picked up by Lambda’s ESM for re-processing. To prevent malformed records from repeatedly failing a batch and returning it to the queue indefinitely, configure a dead-letter queue with a redrive policy on the source SQS queue: once a message has been received more times than the policy's maxReceiveCount allows, SQS moves it to the dead-letter queue. For SQS event sources, the dead-letter queue is configured on the source queue rather than on the function.
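Returning a whole batch to the queue because of one bad message can be avoided with partial batch responses. The sketch below is not necessarily what the sample repository does. It assumes that the event source mapping has `ReportBatchItemFailures` enabled in its function response types, and `process_message` stands in for hypothetical business logic. The handler reports only the failed message IDs, so Lambda deletes the rest of the batch from the queue.

```python
import json


def process_message(body: str) -> None:
    """Hypothetical business logic: require valid JSON containing an 'order_id' key."""
    data = json.loads(body)
    if "order_id" not in data:
        raise ValueError("missing order_id")


def sqs_handler(event, context):
    """Process an SQS batch and report only the messages that failed."""
    failures = []
    for record in event["Records"]:
        try:
            process_message(record["body"])
        except Exception:
            # Only this message becomes visible again after the visibility timeout.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```

An empty `batchItemFailures` list tells Lambda that the entire batch succeeded. Without the response type enabled on the ESM, the return value is ignored and an unhandled exception fails the whole batch.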

Deploying the solution

Clone the following GitHub repository that contains the sample code.

git clone https://github.com/aws-samples/serverless-patterns/

Next, switch into the sqs-lambda directory and deploy the AWS SAM stack:

cd serverless-patterns/sqs-lambda
sam deploy --guided

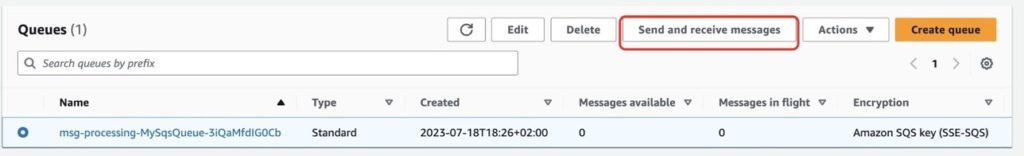

Accept the default values for each parameter. Once AWS SAM has successfully deployed the resources, you can send some messages to the SQS queue in order to trigger the Lambda function and test its functionality. You can do this via the AWS Management Console by navigating to Amazon SQS and choosing Queues from the navigation pane to display the Queues page.

The Queues page provides information about all of the queues in the active region. Select the queue recently deployed via AWS SAM and click on Send and receive messages.

Figure 5: The queues page lists all SQS queues in your AWS account in the active AWS Region

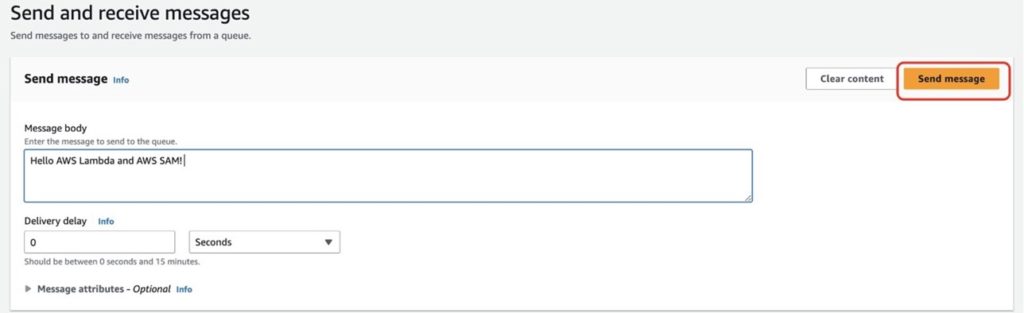

The console displays the Send and receive messages page. In the Message body, enter a message text and click Send message. You may do this a few times to send multiple messages to the queue.

Figure 6: Send a message to your SQS queue
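As an alternative to the console, test messages can be sent programmatically. This sketch uses the boto3 SQS client's `send_message` call. The helper name `send_messages` is hypothetical, the queue URL must be the URL of the queue that AWS SAM deployed to your account, and the client is injected so that the code can be exercised without AWS access.

```python
def send_messages(sqs_client, queue_url: str, bodies: list) -> list:
    """Send each body as a separate SQS message and return the message IDs."""
    message_ids = []
    for body in bodies:
        response = sqs_client.send_message(QueueUrl=queue_url, MessageBody=body)
        message_ids.append(response["MessageId"])
    return message_ids
```

In a real environment, create the client with `boto3.client("sqs")` and pass the queue URL shown in the Amazon SQS console.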

Lambda is natively integrated with Amazon CloudWatch for logging and monitoring. Function logs and metrics are automatically sent to CloudWatch. Learn more about Lambda monitoring with CloudWatch and tracing with AWS X-Ray in the AWS Lambda Operator Guide.

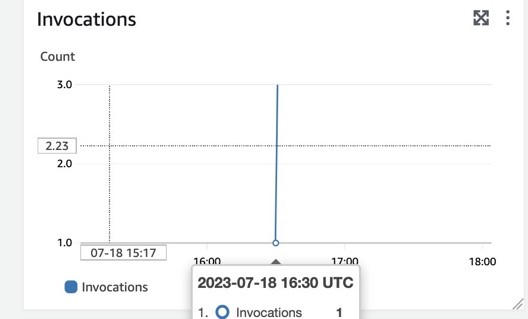

Once the message is delivered, you can verify that the deployed Lambda function was invoked by opening the function in the AWS Lambda console and selecting the Monitor/Metrics tab.

Figure 7: AWS Lambda invocation metrics are visible via the Monitor/Metrics tab

Additionally, the Lambda service will automatically send logs to Amazon CloudWatch for each invocation of the Lambda function, which can be inspected in the Lambda console Monitor/Logs tab or via the CloudWatch console.

A note on batch sizes: the Lambda service will poll the SQS queue and attempt to collect the number of messages specified in the ESM’s batch size, or up to 6 MB, which is the maximum event payload size for a function invocation.

If there are fewer messages available in the queue, the Lambda service will invoke your function with all available messages. To avoid invoking the function with a small number of records, you can instruct the event source to buffer records for up to 5 minutes by configuring a batch window.
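The interplay between batch size and payload limit can be illustrated with a simplified model. The sketch below is an assumption-laden illustration, not a description of the actual poller: it groups message sizes into batches that respect both limits and ignores the batch window and any other service internals. The function name `plan_batches` is hypothetical.

```python
def plan_batches(message_sizes, batch_size=10, max_payload_bytes=6 * 1024 * 1024):
    """Group message sizes (in bytes) into batches limited by count and total size."""
    batches, current, current_bytes = [], [], 0
    for size in message_sizes:
        batch_full = len(current) == batch_size
        too_large = current_bytes + size > max_payload_bytes
        if current and (batch_full or too_large):
            batches.append(current)  # close the current batch, start a new one
            current, current_bytes = [], 0
        current.append(size)
        current_bytes += size
    if current:
        batches.append(current)  # remaining messages form a smaller final batch
    return batches
```

In this model, 25 small messages with a batch size of 10 yield batches of 10, 10, and 5 messages, which mirrors the case described above where fewer messages than the batch size are available.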

Cleaning up

To delete the resources deployed to your AWS account via AWS SAM, run the following command in the serverless-patterns/sqs-lambda directory and follow the provided instructions:

sam delete

Implementing a serverless sentiment detection architecture

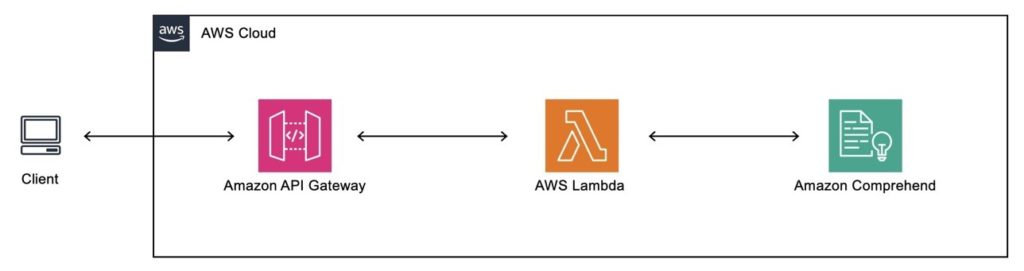

In this example, you will use AWS Lambda, Amazon API Gateway, and Amazon Comprehend to deploy an API that can analyze the sentiment of an input text. The API will return a synchronous response that contains information on whether the input text has a positive, negative, or neutral sentiment and the confidence level with which it makes this assessment. This functionality is implemented using the following architecture:

Figure 8: Serverless sentiment detection architecture

- An Amazon API Gateway REST API as the front door of the application. API Gateway provides authentication, caching, monitoring, and additional features that enable you to build secure APIs at scale. API Gateway passes the incoming request from the client as input to the backend Lambda function.

- A Lambda function that receives the request and calls the Amazon Comprehend DetectSentiment API.

- Amazon Comprehend is a natural language processing (NLP) service that uses machine learning to detect key phrases, topics, sentiment, and more in documents.
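The three components above meet in the Lambda function's handler. The following sketch shows what such a handler could look like. It is not the code from the sample repository, and it assumes a Lambda proxy integration in which the request body arrives as a JSON string in `event["body"]`. It uses the boto3 Comprehend client's `detect_sentiment` call, and the client is injected through the hypothetical `build_handler` factory so that the logic can be tried without AWS access.

```python
import json


def build_handler(comprehend_client):
    """Return a Lambda handler bound to the given Comprehend client."""

    def handler(event, context):
        body = json.loads(event.get("body") or "{}")
        text = body.get("input", "")
        if not text:
            return {"statusCode": 400, "body": json.dumps({"error": "missing 'input'"})}
        # Assumption: input text is English; LanguageCode is a required parameter.
        result = comprehend_client.detect_sentiment(Text=text, LanguageCode="en")
        payload = {
            "sentiment": result["Sentiment"],
            "confidence_scores": result["SentimentScore"],
        }
        return {"statusCode": 200, "body": json.dumps(payload)}

    return handler
```

In a deployed function, the handler would be created once at module level, for example with `handler = build_handler(boto3.client("comprehend"))`, so that the client is reused across invocations.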

For use cases that don’t require the advanced functionality of API Gateway, such as request validation, throttling, or custom authorizers, you can consider using Lambda function URLs. This can apply to webhook handlers, form validators, machine learning inference, and more. Familiarize yourself with the security and authentication model for Lambda function URLs to evaluate if they fit your use case.

Deploying the solution

Clone the following GitHub repository, which contains the sample code. If you have already cloned the repository as part of the serverless message processing example in the previous section, you can skip this step.

git clone https://github.com/aws-samples/serverless-patterns/

Next, switch into the apigw-lambda-comprehend-sam directory and deploy the AWS SAM stack:

cd serverless-patterns/apigw-lambda-comprehend-sam
sam deploy --guided

Accept the default values for each parameter except for the prompt "DetectSentimentLambdaFunction has no authentication. Is this okay? [y/N]". For the purposes of this sample application, answer this prompt explicitly with y. As a result, anyone will be able to call this example REST API without any form of authentication. For production applications, you should enable authentication for the API Gateway using one of several available options and follow the API Gateway security best practices.

Once AWS SAM has successfully deployed the resources to your AWS account, it returns the endpoint URL for your API as an output:

CloudFormation outputs from deployed stack
----------------------------------------------------------------
Outputs
----------------------------------------------------------------
Key            SentimentAnalysisAPI
Description    API Gateway endpoint URL for the dev stage of the Detect Sentiment API
Value          https://<API Gateway endpoint>.execute-api.<AWS Region>.amazonaws.com/dev/detect_sentiment/
----------------------------------------------------------------

Testing the sentiment analysis API

Let’s test the deployed sentiment analysis API by providing an input with a positive sentiment. You can use curl to send an HTTPS POST request to the API. Make sure to replace the API endpoint URL with the one from your AWS SAM outputs:

curl -d '{"input": "I love strawberry cake!"}' -H 'Content-Type: application/json' https://<API Gateway endpoint>.execute-api.<AWS Region>.amazonaws.com/dev/detect_sentiment/

The API returns a response that contains the detected sentiment and a confidence score for all available sentiments:

{
  "sentiment":"POSITIVE",
  "confidence_scores":{
    "Positive":0.9991476535797119,
    "Negative":9.689308353699744e-05,
    "Neutral":0.0006526037468574941,
    "Mixed":0.00010290928184986115
  }
}

Try testing the API with an input containing a negative sentiment and compare the results:

curl -d '{"input": "I hate chocolate cake!"}' -H 'Content-Type: application/json' https://<API Gateway endpoint>.execute-api.<AWS Region>.amazonaws.com/dev/detect_sentiment/
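The same requests can be sent from Python using only the standard library. This is a minimal sketch: the helper names are hypothetical, and the URL passed in must be the endpoint from your own AWS SAM outputs. `top_sentiment` shows one way to pick the highest-scoring label from a response shaped like the one above.

```python
import json
import urllib.request


def build_request(api_url: str, text: str) -> urllib.request.Request:
    """Build the same HTTPS POST request that the curl commands send."""
    return urllib.request.Request(
        api_url,
        data=json.dumps({"input": text}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def detect_sentiment(api_url: str, text: str) -> dict:
    """Call the deployed API and return the decoded JSON response."""
    with urllib.request.urlopen(build_request(api_url, text)) as response:
        return json.loads(response.read())


def top_sentiment(result: dict) -> str:
    """Return the label with the highest confidence score."""
    scores = result["confidence_scores"]
    return max(scores, key=scores.get)
```

Calling `detect_sentiment` requires network access and a deployed stack, whereas `build_request` and `top_sentiment` can be tried offline.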

Cleaning up

To delete the resources deployed to your AWS account via AWS SAM, run the following command in the serverless-patterns/apigw-lambda-comprehend-sam directory and follow the provided instructions:

sam delete

Conclusion and where to go next

Serverless services, such as AWS Lambda, enable developers to build and run applications without needing to manage server infrastructure, and to focus on writing code that supports their desired business logic. This article presented the core concepts of AWS Lambda and two example applications that illustrate how easy it is to get started building with AWS Lambda. You learned how to integrate AWS Lambda with other AWS serverless services and how to implement and deploy functions via IaC tools such as AWS SAM.

To get more hands-on experience and continue building with AWS serverless services, navigate to the following resources. The self-paced online workshop Build, Secure, Manage Serverless Applications at Scale on AWS expands on the concepts introduced in this article by implementing an API-first serverless architecture and introduces new services such as AWS Step Functions.

The AWS Serverless Developer Experience workshop doubles down on development with AWS SAM as well as principles such as distributed event-driven architectures, messaging patterns, orchestration, and observability and how to apply them in your serverless projects. Familiarize yourself with these important concepts to get the most out of using AWS serverless services.

Finally, visit Serverless Land for the latest learning resources, blog posts, videos, and other content around Serverless on AWS.