When you think of the cloud, one name most likely comes to mind: Kubernetes. Especially in recent years, Kubernetes has become synonymous with the cloud for many people, and they’re certainly not wrong. The automation tool, whose name is Greek for helmsman, was originally developed by Google for deploying, scaling, and managing containerized applications, and was later donated to the Cloud Native Computing Foundation (CNCF). Its move into the open source community took the platform to the next level, with fantastic projects for serverless workloads such as Knative. Kubernetes clusters seem to be a must-have in every good product portfolio, from American hyperscalers to up-and-coming German cloud companies. But Kubernetes is by no means the only technology with a lot to offer as a cloud platform.


To look at the alternatives, let’s take a step back: What exactly is a cloud platform? In a nutshell, a cloud is a system that (ideally) provides self-service, shared, and scalable infrastructure resources over a network and bills by usage. The cloud platform is the technology that provisions and manages these infrastructure resources. With Kubernetes, for instance, the infrastructure resources are virtual or physical servers on which containerized applications run via a container runtime, while Kubernetes orchestrates, scales, and repairs them.

The problem with Kubernetes

The packaged applications run in pods, which are distributed across nodes, the provisioned servers. One or more nodes are grouped into a cluster, which is provisioned and managed with Kubernetes. Kubernetes offers a plethora of configuration options at each of these levels, and they can be stored as code in YAML files. But don’t worry! Much of this configuration can be condensed with Infrastructure as Code abstractions such as Terraform scripts or Helm Charts. If your head’s already spinning, rest assured, you’re not alone. Kubernetes has a steep learning curve, and the platform’s high flexibility comes at a price: being able to adjust many details in many places also means that you may have to turn a lot of knobs. And if you don’t understand its internal design, you can quickly break something.

The cloud is a crucial topic, especially in the DevOps movement. Combining development and operations is easiest when developers can set up the infrastructure for deploying their applications themselves, in self-service. But you should also consider the capacity of the people involved in the development process. Ideally, the modern engineer masters high-quality agile processes, TypeScript with Vue or React for the frontend, Java or Go for the backend, messaging services such as Kafka or RabbitMQ, relational and NoSQL databases, and at least three cloud platforms. That is difficult, especially since each of these fields has a steep learning curve. Even worse, some of these learning curves lie in areas that add no value of their own for the end customer and therefore have no direct business value. Application developers should be able to focus on application logic without having to invest unnecessary time in Kubernetes training.

Cloud Foundry’s solution

This is where Cloud Foundry enters as an alternative platform. Cloud Foundry has a clear mission: simplifying cloud adoption. Onsi Fakhouri, at the time a vice president at Pivotal (the company that moved Cloud Foundry into the independent Cloud Foundry Foundation), once opened a presentation about the system with a haiku (Box: “Cloud Foundry Haiku”) that sums up Cloud Foundry’s basic idea quite well.

With a focus on application development, the infrastructure details don’t matter. The requirement is simple: the application must run stably at all times. Cloud Foundry supplies load balancers and routes, creates service instances, and automatically connects these instances to the application environment. Since users aren’t interested in the details, many things can be abstracted away and simplified with sensible default configurations. The result of this long list of automated processes is a cloud service where customers only have to say: here is my source code.

Cloud Foundry Haiku

Here is my source code,

run it on the cloud for me,

I do not care how

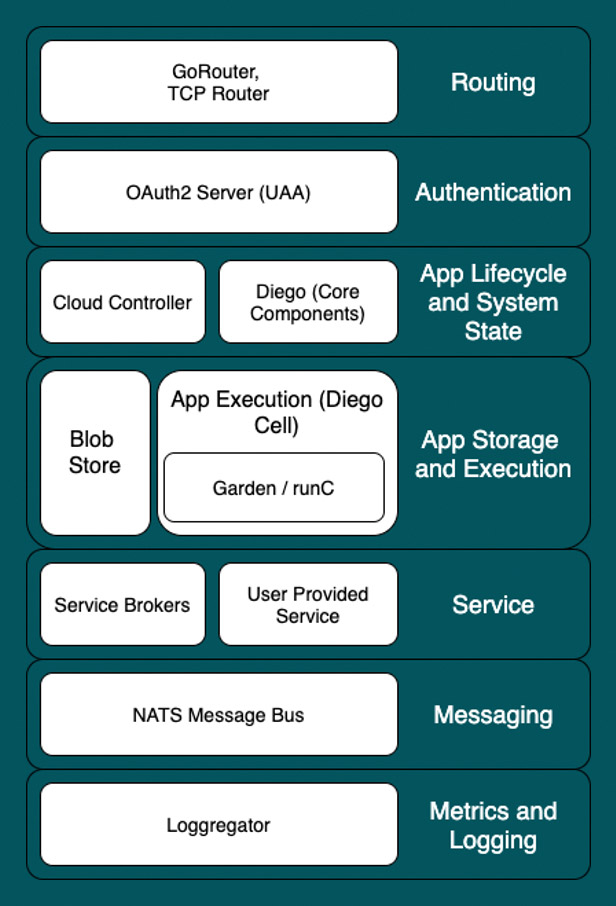

The inner life of a Cloud Foundry platform

Cloud Foundry achieves this with a long list of complex internal components. They could fill an entire article series of their own, so I recommend the book “Cloud Foundry: The Definitive Guide” by Duncan C. E. Winn [1] for more details. Here, I’ll offer only a rough overview, starting at the system’s boundaries.

In general, Cloud Foundry can run on almost anything, even on bare metal machines if necessary. But it’s highly recommended to abstract the data center resources through an Infrastructure as a Service (IaaS) layer. There is currently a strong movement in the Cloud Foundry open source community to run the platform on Kubernetes, with projects like KubeCF, which is packaged as Helm Charts, or cf-for-k8s. However, the best-tested deployment variant to date relies on a component from the Cloud Foundry ecosystem itself: BOSH [2].

BOSH is an open source release management tool designed for installing Cloud Foundry, but it can also be used for other software. Similar to Helm Charts in Kubernetes, BOSH provides preconfigured releases that the BOSH Director rolls out and manages. Through its Cloud Provider Interfaces (CPIs), BOSH supports several IaaS environments, such as Microsoft Azure, VMware vCloud, or OpenStack.

Naturally, the most important IaaS resources for Cloud Foundry are compute (in OpenStack, for example, in the form of virtual machines, or VMs) and storage (in the form of volumes). Cloud Foundry manages the VMs with a system called Diego. On the Diego Cells, Garden provides the container runtime for the applications along with other components needed to manage them. Access to the VMs and state management are abstracted by the Cloud Controller component. To ensure that you only have access to your own applications, the Cloud Controller is connected to the User Account and Authentication Server (UAA), which offers extensive role and access management.

To reach applications running in the container runtime on the Diego Cells, you request routes on a wildcard DNS record, which are served by the Gorouter (for HTTP traffic) or the TCP router. Data services are instantiated and connected through service brokers and a built-in marketplace, and you can add your own services to the marketplace by registering an additional service broker. For all of this to work, Cloud Foundry also needs its own message bus (NATS). So that you don’t lose track, a log aggregator (Loggregator) collects the log output of the different layers. Of course, access to it is restricted and managed with the roles and permissions in the UAA.
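To make the routing idea more concrete, here is a toy Python model of host-based routing on a wildcard domain. It is a sketch under simplifying assumptions, not how the Gorouter is implemented: the class, the domain, and the instance addresses are hypothetical, and round robin is used only because it is an easy-to-follow load-balancing strategy.

```python
from __future__ import annotations

import itertools


class ToyRouter:
    """Toy model of host-header routing on a shared wildcard domain.

    DNS resolves every *.<domain> name to the router; the router then picks
    a backend instance based on the Host header of the request.
    """

    def __init__(self, domain: str) -> None:
        self.domain = domain
        self._instances: dict[str, list[str]] = {}
        self._cycles: dict[str, itertools.cycle] = {}

    def register(self, hostname: str, address: str) -> None:
        """Register an app instance (ip:port) under hostname.domain."""
        route = f"{hostname}.{self.domain}"
        self._instances.setdefault(route, []).append(address)
        # Rebuild the round-robin iterator whenever the instance list changes.
        self._cycles[route] = itertools.cycle(list(self._instances[route]))

    def route(self, host_header: str) -> str | None:
        """Return the next backend for this host, or None if no route matches."""
        cycle = self._cycles.get(host_header.lower())
        return next(cycle) if cycle is not None else None


router = ToyRouter("apps.example.com")  # hypothetical shared domain
router.register("spring-music", "10.0.0.5:61001")
router.register("spring-music", "10.0.0.6:61002")
```

Requests for spring-music.apps.example.com then alternate between the two instances, while an unknown hostname yields None, which a real router would answer with an error response.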

Fig. 1: Cloud Foundry’s component layers [1].

Aside from the basic components, additional extensions are available. For example, Stratos [3] provides a web UI on top of the Cloud Foundry API. Another example is the App Autoscaler component, which lets you define rules for scaling application instances.
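The kind of rule such an autoscaler evaluates can be sketched as follows. The rule fields, metric names, and bounds are hypothetical and not the App Autoscaler’s actual policy format; the sketch only shows the basic idea of a threshold that adds or removes instances within a minimum and maximum count.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ScalingRule:
    """Hypothetical threshold rule (not the App Autoscaler's real policy schema)."""
    metric: str        # e.g. "cpu" in percent
    operator: str      # ">=" or "<"
    threshold: float
    adjustment: int    # instances to add (positive) or remove (negative)


def clamp(value: int, low: int, high: int) -> int:
    return max(low, min(high, value))


def desired_instances(current: int, metrics: dict[str, float],
                      rules: list[ScalingRule],
                      min_count: int, max_count: int) -> int:
    """Apply the first rule whose threshold is breached, within [min_count, max_count]."""
    for rule in rules:
        value = metrics.get(rule.metric)
        if value is None:
            continue  # no data for this metric yet
        if rule.operator == ">=":
            breached = value >= rule.threshold
        elif rule.operator == "<":
            breached = value < rule.threshold
        else:
            raise ValueError(f"unsupported operator: {rule.operator}")
        if breached:
            return clamp(current + rule.adjustment, min_count, max_count)
    # No rule fired: keep the current count, still respecting the bounds.
    return clamp(current, min_count, max_count)


# Scale out above 80 % CPU, scale in below 20 %.
rules = [ScalingRule("cpu", ">=", 80, 1), ScalingRule("cpu", "<", 20, -1)]
```

A production autoscaler would also need something like a breach duration and a cool-down period, so that a short spike does not make the instance count flap.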

Of course, this brief overview covers far from everything the Cloud Foundry stack has to offer. But to get back to the idea of Cloud Foundry: its inner components (Fig. 1) aren’t important for application developers. Whether Diego or Kubernetes manages the containers, the main thing is that it works. The platform operator can worry about everything else.

The world of orgs and spaces

However, one thing is definitely of interest for deploying your own application on Cloud Foundry: user management of the UAA. That’s where Cloud Foundry implements another interesting concept with organizations and spaces.

In Cloud Foundry, spaces are virtual rooms that applications run in. A space can contain several applications that share resources and access. Environment variables, such as the injected connection credentials for data services, and the network are shared within a space. For that reason, the number of applications per space should be kept as small as possible.
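Bound service credentials reach an application through the VCAP_SERVICES environment variable, a JSON document keyed by service offering, with a list of bound instances under each key. A minimal Python sketch of reading it could look like this; the function name and the sample binding (offering mysql, instance music-db, and its URI) are hypothetical.

```python
from __future__ import annotations

import json
import os
from collections.abc import Mapping


def service_credentials(instance_name: str,
                        env: Mapping[str, str] | None = None) -> dict:
    """Return the credentials of the bound service instance called instance_name.

    VCAP_SERVICES is a JSON object keyed by service offering; each value is a
    list of bound instances with fields such as "name", "plan", "credentials".
    """
    env = os.environ if env is None else env
    services = json.loads(env.get("VCAP_SERVICES", "{}"))
    for instances in services.values():
        for instance in instances:
            if instance.get("name") == instance_name:
                return instance.get("credentials", {})
    raise KeyError(f"no bound service instance named {instance_name!r}")


# Hypothetical example of what the platform might inject for a MySQL binding.
example_env = {
    "VCAP_SERVICES": json.dumps({
        "mysql": [{
            "name": "music-db",
            "label": "mysql",
            "plan": "small",
            "credentials": {"uri": "mysql://user:secret@db.example.com:3306/music"},
        }]
    })
}
```

Looking instances up by name rather than by offering keeps the code independent of which broker provides the database.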

To still be able to map complex systems, Cloud Foundry offers organizations, or orgs for short. As with spaces, an org is not a physical unit but simply a virtual area that groups several spaces. Cloud Foundry doesn’t prescribe how orgs and spaces must be structured. For instance, an org can stand for an application while its spaces stand for the development, QA, and production environments. Alternatively, an org can be an environment for a microservices system, while spaces represent individual microservices.

In any case, there are many options for managing orgs and spaces. For example, you can limit the available resources at both the org and the space level. Besides the org manager, there are also space managers, who only manage access to a single space. Space developers have only limited rights to manage the space itself, but they can actively work in it through the Cloud Foundry API, for example by deploying applications. Auditors at both levels have read access to audit-relevant information. The UAA provides many useful roles out of the box and supports custom roles through a fine-grained permission system.
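To illustrate how roles and resource limits at both levels interact, the following sketch checks whether a push would be allowed. The role-to-permission mapping is a deliberately simplified assumption, not Cloud Foundry’s actual permission matrix; the memory-only quotas and all names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Simplified, hypothetical mapping of roles to permissions.
PERMISSIONS: dict[str, set[str]] = {
    "OrgManager": {"manage_org", "manage_space_users"},
    "OrgAuditor": {"read_audit"},
    "SpaceManager": {"manage_space_users"},
    "SpaceDeveloper": {"push_app", "bind_service"},
    "SpaceAuditor": {"read_audit"},
}


@dataclass
class Space:
    name: str
    memory_quota_mb: int  # space-level limit
    used_mb: int = 0


@dataclass
class Org:
    name: str
    memory_quota_mb: int  # org-level limit shared by all of its spaces
    spaces: list[Space] = field(default_factory=list)

    def used_mb(self) -> int:
        return sum(space.used_mb for space in self.spaces)


def check_push(roles: set[str], org: Org, space: Space,
               memory_mb: int, instances: int) -> str:
    """Return "ok" or the reason why the push would be rejected."""
    allowed = set().union(*(PERMISSIONS.get(role, set()) for role in roles))
    if "push_app" not in allowed:
        return "forbidden: no role grants push_app"
    requested = memory_mb * instances
    if space.used_mb + requested > space.memory_quota_mb:
        return "rejected: space quota exceeded"
    if org.used_mb() + requested > org.memory_quota_mb:
        return "rejected: org quota exceeded"
    return "ok"


# Hypothetical org for one application with two environment spaces.
dev = Space("development", memory_quota_mb=2048)
prod = Space("production", memory_quota_mb=4096, used_mb=3072)
org = Org("spring-music", memory_quota_mb=4608, spaces=[dev, prod])
```

Here, two 1 GB instances would still fit into the development space’s own limit, but together with what production already uses they would exceed the org’s limit.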

From zero to the cloud in five minutes

But enough theory! What does working with Cloud Foundry look like? Is it as easy to use as it promises? Fortunately, the open source community already provides conveniently prepared applications on GitHub as a showcase [4]. One of these applications is Spring Music, a simple Java Spring application that lists several music albums and their artists. The entire application’s code can be found on GitHub at [5]. The application will serve as our example.

Nothing else needs to be taken into account in the application itself. There’s only one special file in the repository: manifest.yml. Its contents, shown in Listing 1, provide Cloud Foundry with information about the application. Besides the name, the manifest defines a memory limit, the path to the application’s artifact, and environment variables. Additionally, the line

random-route: true

adds a random string to the automatically provisioned route. In the Cloud Foundry context, a route is a subdomain on a wildcard DNS entry that is shared by all applications on the platform. By default, the subdomain is just the application name, but that route may already be taken by another application. Adding a random string to the application name for the route prevents this conflict.

Listing 1

```yaml
applications:
- name: spring-music
  memory: 1G
  random-route: true
  path: build/libs/spring-music-1.0.jar
  env:
    JBP_CONFIG_SPRING_AUTO_RECONFIGURATION: '{enabled: false}'
    SPRING_PROFILES_ACTIVE: http2
    # JBP_CONFIG_OPEN_JDK_JRE: '{ jre: { version: 11.+ } }'
```
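The effect of random-route can be sketched as a small hostname-selection function. This is an illustration under assumptions: the real cf CLI generates the random part in its own format, whereas the hypothetical pick_hostname below simply appends random lowercase letters and digits and retries on a collision.

```python
from __future__ import annotations

import secrets
import string


def pick_hostname(app_name: str, taken: set[str], random_route: bool,
                  suffix_len: int = 6, attempts: int = 10) -> str:
    """Choose a hostname (subdomain) for an app on a shared wildcard domain."""
    if not random_route:
        # Default behavior: the app name is the hostname, so a clash is an error.
        if app_name in taken:
            raise ValueError(f"route {app_name!r} is already taken")
        return app_name
    alphabet = string.ascii_lowercase + string.digits
    for _ in range(attempts):
        # Hypothetical suffix format; the real CLI uses its own scheme.
        suffix = "".join(secrets.choice(alphabet) for _ in range(suffix_len))
        candidate = f"{app_name}-{suffix}"
        if candidate not in taken:
            return candidate
    raise RuntimeError("no free hostname found")


taken_routes = {"spring-music"}  # another app already uses the plain name
```

With random_route enabled, a second spring-music deployment gets a name such as spring-music- followed by six random characters instead of failing on the occupied route.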

Next, we still need a Cloud Foundry org. Anynines offers a 30-day trial period for their Cloud Foundry-based Platform as a Service offering [6]. In this example, I used the service after registering for free.

The Cloud Foundry CLI (cf CLI) is also required to interact with the Cloud Foundry org. It can be downloaded and installed from [7]. After installing it, all you need to do is target the public Anynines Cloud Foundry API and log in. If no API endpoint has been set yet, cf login prompts for it; alternatively, it can be passed with the -a option:

$ cf login

Since the Anynines Cloud Foundry platform creates an org for every registered user, that org is selected immediately. Afterwards, you can select a space from the list of existing spaces in the org at the cf CLI prompt. Now, from the directory of the cloned Spring Music application, the app just needs to be built with Gradle and pushed to Cloud Foundry with the following command:

$ cf push

If you don’t want to use a manifest file, the application name and many other settings can also be passed as parameters to the push command. In this case, however, the manifest already contains the configuration, so no parameters are necessary.
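To illustrate how manifest attributes correspond to push parameters, this hypothetical helper builds a cf push command line from a few attributes of Listing 1. It uses only the -m (memory), -p (path), and --random-route options; the env entries are deliberately left out, since environment variables can be set separately, for example with cf set-env. The result is just a list of arguments; nothing is executed.

```python
from __future__ import annotations


def push_args(app: dict) -> list[str]:
    """Build a cf push argument list from a few manifest attributes.

    Hypothetical helper: only name, memory, path and random-route are mapped.
    """
    args = ["cf", "push", app["name"]]
    if "memory" in app:
        args += ["-m", str(app["memory"])]   # memory limit, e.g. "1G"
    if "path" in app:
        args += ["-p", app["path"]]          # artifact to upload
    if app.get("random-route"):
        args.append("--random-route")        # append a random part to the route
    return args


# The application entry from Listing 1 as a Python dict (env omitted).
spring_music = {
    "name": "spring-music",
    "memory": "1G",
    "random-route": True,
    "path": "build/libs/spring-music-1.0.jar",
}
```

Such a list could be handed to subprocess.run. Keep in mind that cf push also looks for a manifest.yml in the current directory by default.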

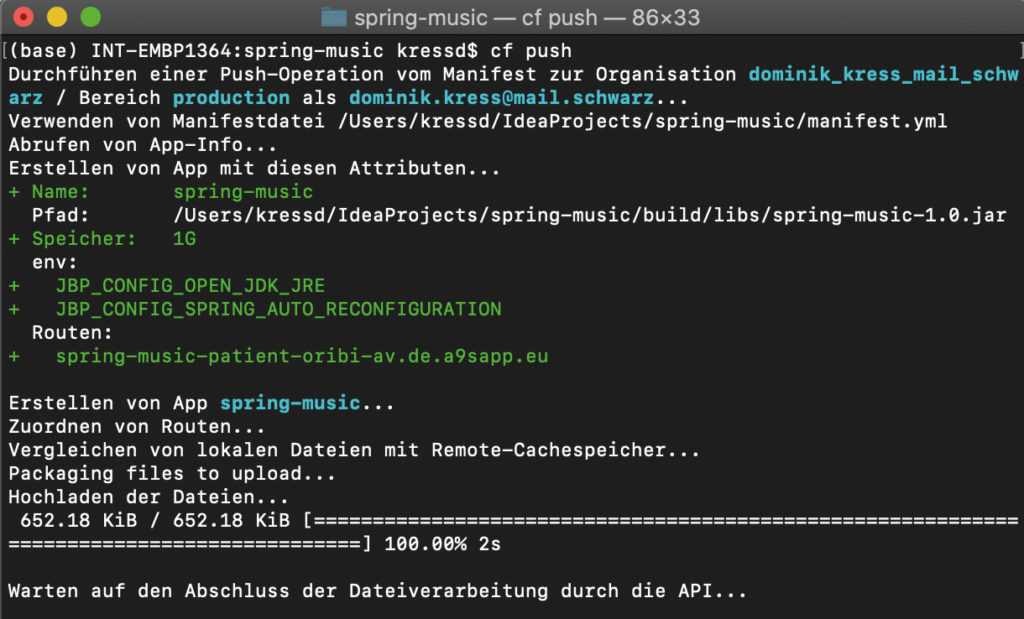

Fig. 2: Output after executing the cf push command

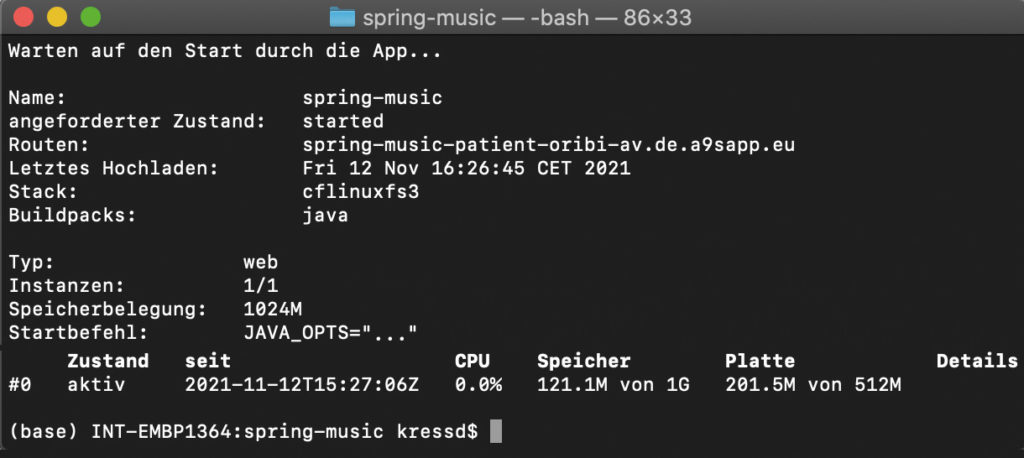

As you can see in Figure 2, Cloud Foundry recognizes the manifest file and creates an application in the selected space based on the information in it. When the application is created in the space, a route is reserved right away, so the application is reachable as soon as provisioning is complete. After the artifact has been uploaded successfully, a process called staging begins. The cf CLI then waits for the application to start, as shown in Figure 3, and finally outputs the container state if the start was successful.

Fig. 3: Output at the end of executing the cf push command
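The push sequence in Figures 2 and 3 can be modeled as an ordered pipeline that stops at the first failing phase. The phase names and the handler mechanism are hypothetical and only mirror the order described in the text; they are not the cf CLI’s internals.

```python
from __future__ import annotations

from typing import Callable

# Phases of a push in the order described in the text (names are illustrative).
PUSH_PHASES = ["read_manifest", "create_app", "reserve_route",
               "upload_artifact", "stage", "start", "report_state"]


def run_push(handlers: dict[str, Callable[[], bool]]) -> tuple[list[str], str | None]:
    """Run the phases in order and stop at the first handler that returns False.

    Phases without a handler are treated as successful. Returns the completed
    phases and the name of the failed phase (None if everything succeeded).
    """
    done: list[str] = []
    for phase in PUSH_PHASES:
        handler = handlers.get(phase)
        if handler is not None and not handler():
            return done, phase
        done.append(phase)
    return done, None
```

In this model, a failure during staging leaves the earlier phases, including the route reservation, already completed.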

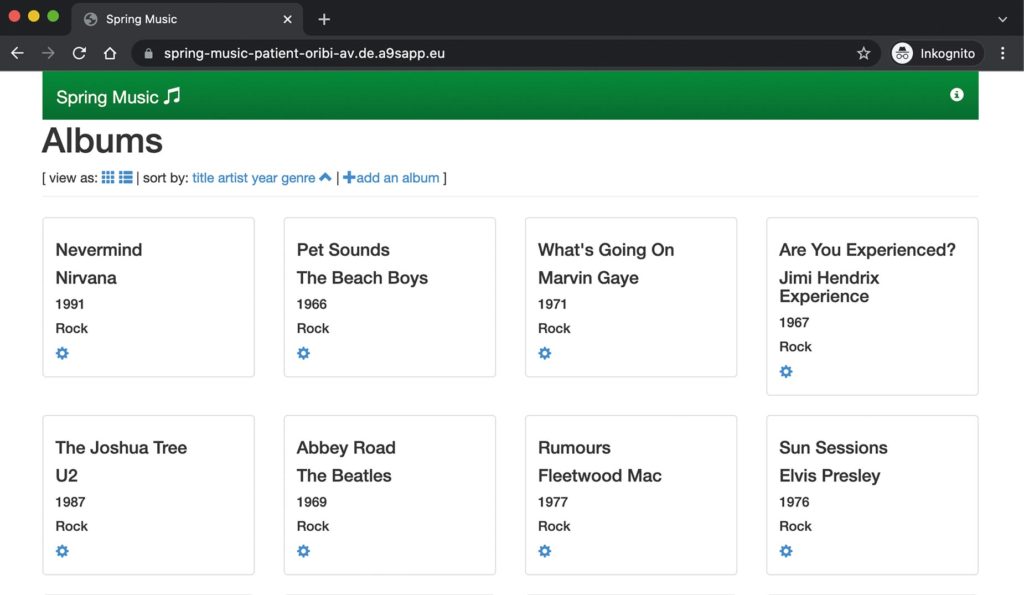

This means that the application is now successfully deployed to Cloud Foundry and can be accessed under the reserved route, as seen in Figure 4. This example impressively shows that in just five minutes, without any complicated configuration, a single command brings an application into a scalable cloud environment, starts it, and makes it reachable.

Fig. 4: The Spring Music application under the reserved route

The magic of buildpacks

Some attentive readers might be confused right now: Didn’t I mention a container runtime at the heart of Cloud Foundry? Then how could a Java artifact simply be “pushed” in the example, without a container and with just a reference to the .jar file? The answer lies in the staging step of the push process, behind which sits another very interesting Cloud Foundry concept: buildpacks.

Even though the platform readily accepts and runs Docker images and other OCI-compatible container images, having to containerize the application on the development side goes against Cloud Foundry’s basic principle of abstracting away infrastructure effort. So, instead of containerizing with Dockerfiles, Cloud Foundry offers an alternative in the form of buildpacks. There are dedicated buildpacks for the various languages and frameworks.

Executing a buildpack is called staging. Every staging run, and every buildpack, has three essential steps. The detect step checks whether the buildpack is suitable for the application; for the artifact used here, it checks for the .jar extension. In the second step, compile, all of the application’s dependencies are collected and compiled. In our example, this includes the JVM and potentially drivers for connecting to the data services. In the third and last step, release, everything is prepared for running the application. The output of a staging process is called a droplet. Combined with a root filesystem (the stack), it can be run on the Garden container runtime.
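The three staging steps can be sketched as a small in-memory model. Real buildpacks are sets of executables (such as bin/detect, bin/compile, and bin/release) that the platform runs; the Buildpack and Droplet classes and the toy Java buildpack below are hypothetical and only reproduce the control flow from the text: the first buildpack whose detect step matches collects the dependencies and supplies the start command.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class Buildpack:
    """Hypothetical in-memory buildpack with the three steps from the text."""
    name: str
    detect: Callable[[list[str]], bool]        # is this buildpack suitable?
    compile: Callable[[list[str]], list[str]]  # extra files, e.g. a JVM
    release: Callable[[list[str]], str]        # how to start the app


@dataclass
class Droplet:
    buildpack: str
    contents: list[str]
    start_command: str


def stage(app_files: list[str], buildpacks: list[Buildpack]) -> Droplet:
    """Run detect on each buildpack in order; compile and release with the first match."""
    for bp in buildpacks:
        if bp.detect(app_files):
            contents = app_files + bp.compile(app_files)
            return Droplet(bp.name, contents, bp.release(app_files))
    raise RuntimeError("staging failed: no buildpack detected the application")


# A toy Java buildpack: detects a .jar artifact and "adds" a JVM.
java_bp = Buildpack(
    name="java",
    detect=lambda files: any(f.endswith(".jar") for f in files),
    compile=lambda files: ["jre/"],  # stands in for downloading a JVM, drivers, ...
    release=lambda files: "java -jar " + next(f for f in files if f.endswith(".jar")),
)
```

Staging the Spring Music artifact with this toy buildpack yields a droplet whose start command runs the .jar, while an application without a .jar file fails detection.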

The Cloud Foundry community provides buildpacks for an enormous number of languages, frameworks, and other dependencies. Custom buildpacks can also be written and used with cf push, if the platform operator allows it. This intuitive form of containerization has since made its way beyond Cloud Foundry, for example into the Cloud Native Buildpacks project at the CNCF.


Conclusion

Thanks to its high level of abstraction, Cloud Foundry offers a very easy entry into the cloud. For application developers who prefer to focus on their applications rather than their infrastructure, it’s definitely a very good alternative. But if you already have infrastructure experience and are highly motivated to optimize, Cloud Foundry might be a little too limiting. However, projects that integrate Cloud Foundry more closely with Kubernetes could lift this restriction, as they could pass the underlying Kubernetes cluster configuration on to users.

In summary, I can definitely say that the cloud has many faces. Cloud Foundry will certainly remain one of them for a long time to come.

Links & Literature

[1] Winn, Duncan C. E.: “Cloud Foundry: The Definitive Guide”, O’Reilly Media, 2017

[2] BOSH Release Management: https://bosh.io/docs/

[3] Stratos UI: https://stratos.app

[4] CF sample applications: https://github.com/cloudfoundry-samples

[5] Spring Music sample application: https://github.com/cloudfoundry-samples/spring-music

[6] Anynines Cloud Foundry: https://paas.anynines.com

[7] Cloud Foundry CLI: https://docs.cloudfoundry.org/cf-cli/